Service Mesh

Docker containers, microservices, and Kubernetes are already old news, or at least they have become so mainstream that they are no longer cutting-edge technology. I was curious to know what comes after them. If you pay close attention to the latest technology news, I am sure you have heard a lot about the “service mesh”. I spent some time understanding what the service mesh is all about, and it begins with a very basic question: “Why do we need a service mesh?” In this article, I’ll also try to answer some other important questions that should help you decide whether service mesh is the next mainstream technology and whether it is worth introducing into your application tech stack.

Why do we need Service Mesh? What problem is it trying to solve?

In a microservice architecture, handling service-to-service communication is challenging, and most of the time we depend on third-party libraries or components to provide functionality such as service discovery, load balancing, circuit breaking, metrics, telemetry, and more.

However, these components need to be configured inside your application code, and based on the language you are using, the implementation will vary a bit. Anytime these external components are upgraded, you need to update your application, verify it, and deploy the changes. This also creates an issue where now your application code is a mixture of business functionalities and these additional configurations. This tight coupling increases the overall application complexity.

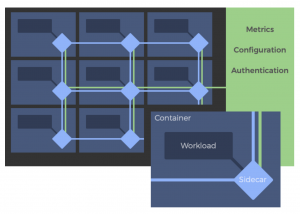
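To make the coupling concrete, here is a minimal sketch in Python. Everything in it is hypothetical and only for illustration: `FlakyInventoryClient` stands in for a remote service, and `can_fulfil_order` is an invented business function. Notice how much of the function is retry plumbing rather than business logic.

```python
import time


class FlakyInventoryClient:
    """Hypothetical stand-in for a remote inventory service that fails a few times."""

    def __init__(self, failures_before_success):
        self.remaining_failures = failures_before_success
        self.calls = 0

    def get_stock(self, item_id):
        self.calls += 1
        if self.remaining_failures > 0:
            self.remaining_failures -= 1
            raise ConnectionError("inventory service unavailable")
        return 7  # pretend there are always 7 items in stock


def can_fulfil_order(client, item_id, quantity, max_attempts=3, backoff_seconds=0.0):
    # Networking concern: the retry loop with backoff lives inside business code.
    last_error = None
    for attempt in range(max_attempts):
        try:
            stock = client.get_stock(item_id)
            break
        except ConnectionError as exc:
            last_error = exc
            time.sleep(backoff_seconds * (attempt + 1))
    else:
        # The loop ran out of attempts without a successful call.
        raise RuntimeError("inventory lookup failed") from last_error

    # Business concern: the only line the product team actually cares about.
    return stock >= quantity
```

Every service written in another language would need its own version of that loop, which is exactly the duplication described above.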

This is where Service Mesh comes to the rescue. It decouples this complexity from your application and puts it in a service proxy called a sidecar. Instead of calling services directly over the network, services call their local sidecar proxy, which in turn manages the request on the service’s behalf, thus encapsulating the complexities of the service-to-service exchange. These sidecars can provide functionality such as:

- traffic management,

- circuit breaking,

- service discovery,

- authentication,

- monitoring,

- rate limiting,

- security,

- and much more

According to Bilgin Ibryam, product manager at Red Hat, there is a shift from “smart endpoints, dumb pipes” to “smart sidecars, dumb pipes”: the intelligence now lives not in the endpoint but in the sidecar. Your business logic forms the core of the application, and the sidecar deployed alongside it offers out-of-the-box distributed primitives, such as handling all the network communication challenges.

(A typical Service Mesh architecture: the business pod talks to its local sidecar proxy for service-to-service communication, and the service mesh provides the various cross-cutting concerns.)
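The idea can be sketched in a few lines of Python. This is a toy, in-process illustration under stated assumptions, not how a real sidecar such as Envoy is implemented: `SidecarProxy`, `CircuitOpenError`, and `get_user_name` are hypothetical names, the "upstream" is just a callable, and recovery (the half-open state of a real circuit breaker) is left out.

```python
class CircuitOpenError(Exception):
    """Raised when the sidecar refuses to forward a call."""


class SidecarProxy:
    """Toy sidecar: forwards calls to an upstream and trips a circuit breaker."""

    def __init__(self, upstream, failure_threshold=3):
        self.upstream = upstream  # callable standing in for the remote service
        self.failure_threshold = failure_threshold
        self.consecutive_failures = 0

    def call(self, request):
        # Fail fast once too many consecutive calls have failed.
        if self.consecutive_failures >= self.failure_threshold:
            raise CircuitOpenError("circuit open, request not forwarded")
        try:
            response = self.upstream(request)
        except ConnectionError:
            self.consecutive_failures += 1
            raise
        self.consecutive_failures = 0  # any success resets the counter
        return response


def get_user_name(proxy, user_id):
    # Business code: no retry, timeout, or breaker logic in sight.
    return proxy.call({"user_id": user_id})["name"]
```

The business function only knows about its local proxy. Changing the failure threshold, or adding retries and metrics, would be a change to the sidecar and not to the application.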

Features Provided by Service Mesh

A service mesh implementation will typically offer you one or more of the following features:

- Normalizes naming and adds logical routing (e.g., maps the code-level name “user-service” to the platform-specific location “AWS-us-east-1a/prod/users/v4”)

- Provides traffic shaping and traffic shifting

- Maintains load balancing, typically with configurable algorithms

- Provides service release control (e.g., canary releasing and A/B type experimentation)

- Offers per-request routing (e.g., traffic shadowing, fault injection, and debug re-routing)

- Adds baseline reliability, such as health checks, timeouts/deadlines, circuit breaking, and retry (budgets)

- Increases security, via transparent mutual Transport Layer Security (TLS) and policies such as Access Control Lists (ACLs)

- Provides additional observability and monitoring, such as top-line metrics (request volume, success rates, and latencies), support for distributed tracing, and the ability to “tap” and inspect real-time service-to-service communication

- Enables platform teams to configure “sane defaults” to protect the system from bad communication
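Two of these features, logical routing and canary releasing, combine naturally, so here is a small hedged sketch of both in Python. The routing table, the 90/10 split, and the `v5` location are assumptions made up for the example; a real mesh would receive this configuration from its control plane.

```python
import random

# Hypothetical routing table: logical name -> weighted concrete destinations.
ROUTES = {
    "user-service": [
        ("AWS-us-east-1a/prod/users/v4", 90),  # stable release
        ("AWS-us-east-1a/prod/users/v5", 10),  # canary release (invented for this sketch)
    ],
}


def resolve(logical_name, rng=random):
    """Map a code-level service name to one weighted, platform-specific location."""
    destinations = ROUTES[logical_name]
    locations = [location for location, _ in destinations]
    weights = [weight for _, weight in destinations]
    return rng.choices(locations, weights=weights, k=1)[0]
```

Application code keeps asking for `user-service`. Shifting more traffic to the canary is a change to the weights only.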

Things to be aware of while introducing service mesh to your application tech stack

A service mesh provides a whole bunch of features to facilitate service-to-service communication in a microservice architecture. But there is no such thing as a “silver bullet” in IT. There is an operational cost to deploying and running a service mesh. Without running any business application of our own, Istio runs about 45 containers to provide all its service mesh features, which introduces an ongoing running cost.

There is a good write-up on this from Michael Kippers. His team looked at using Istio for their main applications. They ran a number of proofs of concept and deployed it to a production-like environment. What they found was that the extra compute power needed just to run the Istio control plane would cost them an additional $50,000 a month, purely for the CPU and memory behind Istio's features.

What Does the Future Hold?

Using Service mesh for Event-Driven Messaging

Popular implementations of service meshes (Istio/Envoy, Linkerd, etc.) currently cater only to the request/response style of synchronous communication between microservices. However, in most pragmatic microservices use cases, interservice communication takes place over a diverse set of patterns, such as request/response (HTTP, gRPC, GraphQL) and event-driven messaging (NATS, Kafka, AMQP).

There are two main emerging architectural patterns for implementing messaging support within a service mesh:

The protocol proxy sidecar

The protocol-proxy pattern is built around the concept that all the event-driven communication channels should go through the service-mesh data plane (i.e., the sidecar proxy). To support event-driven messaging protocols such as NATS, Kafka, or AMQP, you need to build a protocol handler/filter specific to the communication protocol and add that to the sidecar proxy. The following Figure shows the typical communication pattern for event-driven messaging with a service mesh.

The Envoy team is currently working on implementing Kafka support for the Envoy proxy based on the above pattern.
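As a rough illustration of the protocol-proxy idea (and not of Envoy's actual filter API), the Python sketch below lets a sidecar register one handler per protocol. The class name, the `toy` protocol, and its `topic|payload` wire format are all assumptions invented here; real Kafka or AMQP framing is far more involved.

```python
class ProtocolProxySidecar:
    """Toy sidecar that understands messaging protocols through pluggable filters."""

    def __init__(self):
        self.filters = {}  # protocol name -> function extracting the topic
        self.metrics = {}  # topic -> number of messages seen

    def register_filter(self, protocol, extract_topic):
        self.filters[protocol] = extract_topic

    def forward(self, protocol, raw_bytes, broker_send):
        extract_topic = self.filters.get(protocol)
        if extract_topic is None:
            raise ValueError(f"no filter registered for protocol {protocol!r}")
        topic = extract_topic(raw_bytes)
        # Because the filter understands the protocol, the mesh can observe it.
        self.metrics[topic] = self.metrics.get(topic, 0) + 1
        broker_send(raw_bytes)  # the message travels on in its native format
        return topic


def toy_topic(raw_bytes):
    """Filter for the made-up 'topic|payload' wire format."""
    topic, _, _payload = raw_bytes.partition(b"|")
    return topic.decode("utf-8")
```

The point of the pattern is visible in `forward`: the application keeps speaking its messaging protocol, and the sidecar gains per-topic visibility without changing the bytes on the wire.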

The HTTP bridge sidecar

Rather than using a proxy for the event-driven messaging protocol, we can build an HTTP bridge that translates messages to and from the required messaging protocol. In this pattern, the sidecar is primarily responsible for receiving HTTP requests and translating them into Kafka/NATS/AMQP/etc. messages, and vice versa.
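Here is a minimal sketch of the bridge in Python, assuming an in-memory broker in place of Kafka, NATS, or AMQP and an invented `/topics/<name>` URL convention. `InMemoryBroker` and `HttpBridgeSidecar` are hypothetical names, and the `handle` method models an HTTP request as plain arguments instead of running a real server.

```python
import json


class InMemoryBroker:
    """Hypothetical stand-in for Kafka/NATS/AMQP: one FIFO queue per topic."""

    def __init__(self):
        self.topics = {}

    def publish(self, topic, payload):
        self.topics.setdefault(topic, []).append(payload)

    def consume(self, topic):
        queue = self.topics.get(topic, [])
        return queue.pop(0) if queue else None


class HttpBridgeSidecar:
    """Translates HTTP-style requests into broker messages and back."""

    PREFIX = "/topics/"  # assumed URL convention: /topics/<name>

    def __init__(self, broker):
        self.broker = broker

    def handle(self, method, path, body=None):
        if not path.startswith(self.PREFIX) or len(path) == len(self.PREFIX):
            return 404, {"error": "unknown path"}
        topic = path[len(self.PREFIX):]
        if method == "POST":
            # HTTP -> message: serialize the JSON body and publish it.
            self.broker.publish(topic, json.dumps(body).encode("utf-8"))
            return 202, {"status": "accepted"}
        if method == "GET":
            # Message -> HTTP: hand the next message back as a JSON body.
            payload = self.broker.consume(topic)
            if payload is None:
                return 204, None
            return 200, json.loads(payload.decode("utf-8"))
        return 405, {"error": "method not allowed"}
```

The trade-off compared with the protocol proxy is that services only ever speak HTTP, which existing meshes already handle well, at the cost of an extra translation step in the sidecar.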

A Unified, Standard API for Consolidating Service Meshes (SMI)

The Service Mesh Interface (SMI) defines a set of common and portable APIs that aims to provide developers with interoperability across different service mesh technologies including Istio, Linkerd, and Consul Connect. https://smi-spec.io/

The first SMI reference implementation, Service Mesh Hub, was built by solo.io: https://docs.solo.io/service-mesh-hub/latest/

Getting Started with Service Mesh

There is an amazing Pluralsight course, Managing Apps on Kubernetes with Istio, which not only introduces you to the concept of service mesh using Istio but also talks about cost and how to run Istio in production. For those who are starting to explore service mesh, the course is highly recommended.

Top Service Mesh Technologies

At the heart of many service meshes is Envoy, a general-purpose, open-source proxy used as a sidecar to intercept traffic. Other service meshes utilize a different proxy.

When it comes to service mesh adoption, Istio and Linkerd are more established. Yet many other options exist, including Consul Connect, Kuma, Maesh, AWS App Mesh, and OpenShift Service Mesh. Below are the key features of the top service mesh offerings.

Istio

Istio is an extensible open-source service mesh built on Envoy, allowing teams to connect, secure, control, and observe services. Istio was used internally within IBM, then open-sourced in 2017, and is now framed as a collaboration among Google, IBM, and Lyft.

Linkerd

Linkerd is an “ultralight, security-first service mesh for Kubernetes,” according to the website. It’s a developer favorite, with incredibly easy setup (purportedly 60 seconds to install to a Kubernetes cluster). Instead of Envoy, Linkerd uses a fast and lean Rust proxy called linkerd2-proxy, which was built explicitly for Linkerd.

Consul Connect

Consul Connect, the service mesh from HashiCorp, focuses on routing and segmentation, providing service-to-service networking features through an application-level sidecar proxy.

Something unique about Consul Connect is that you have two proxy options. Connect offers its own built-in layer 4 proxy for testing, but also supports Envoy. Connect emphasizes observability, providing integration with tools such as Prometheus to monitor data from sidecar proxies. Consul Connect is also flexible for developer needs. For example, it offers many options for registering services: from an orchestrator, with configuration files, via API, or via command-line interface (CLI).

Kuma

Kuma, from Kong, is a platform-agnostic control plane built on Envoy. Kuma provides networking features to secure, observe, route, and enhance connectivity between services. Kuma supports Kubernetes in addition to virtual machines.

What’s interesting about Kuma is that an enterprise can operate and control multiple isolated meshes from a unified control plane. This ability could be beneficial to high-security use cases that require segmentation and centralized control.

Maesh

Maesh, the container-native service mesh by Containous, bills itself as lightweight and more straightforward to use than other service meshes on the market. While other meshes build on top of Envoy, Maesh adopts Traefik, an open-source reverse proxy and load balancer.

Instead of adopting a sidecar container format, Maesh uses proxy endpoints for each node. Doing so makes Maesh less invasive than other meshes, since it does not edit Kubernetes objects or modify traffic without opt-in. Maesh supports a couple of configuration options: annotations on user service objects as well as Service Mesh Interface (SMI) objects.

Indeed, its support for SMI, a new standard service mesh specification format, is one unique trait of Maesh. If SMI adoption increases throughout the industry, it could offer extensibility benefits and potentially reduce vendor lock-in concerns.

AWS App Mesh

Amazon Web Services’ App Mesh provides “application-level networking for all your services.” It manages all network traffic for services and uses the open-source Envoy proxy to control traffic into and out of a service’s containers. AWS App Mesh supports HTTP/2 and gRPC services.

AWS App Mesh could be a good service mesh option for companies already married to the AWS infrastructure for their container platforms.

OpenShift Service Mesh by Red Hat

OpenShift Service Mesh builds on top of open-source Istio, bringing the Istio control and data plane features. OpenShift enhances Istio with tracing and visibility features powered by two open-source tools. OpenShift uses Jaeger for distributed tracing, permitting better tracking of how requests are handled between services.

OpenShift also uses Kiali for added observability into microservices configuration, traffic monitoring, and tracing analysis.

Conclusion

Given the operational and running costs of a service mesh, it makes sense to use one when you are running a large number of services in production. Also consider a service mesh if your application already implements, or is planning to implement, the features that a service mesh provides.

References:

https://www.infoq.com/news/2020/03/multi-runtime-microservices/

https://dzone.com/articles/the-rise-of-service-mesh-architecture

https://techbeacon.com/app-dev-testing/9-open-source-service-meshes-compared

https://glasnostic.com/blog/microservices-control-data-planes-differences-istio-linkerd