Blockchain technology, with its decentralized and immutable nature, has gained significant traction in various industries for its potential to revolutionize processes and transactions. Hyperledger Fabric stands out as a popular permissioned blockchain framework due to its modular architecture, flexibility, and enterprise-grade features.

In this guide, I’ll walk you through the process of setting up a multi-cluster, multi-organization Hyperledger Fabric network, with detailed steps and explanations along the way.

Understanding Multi-Cluster Hyperledger Fabric Architecture

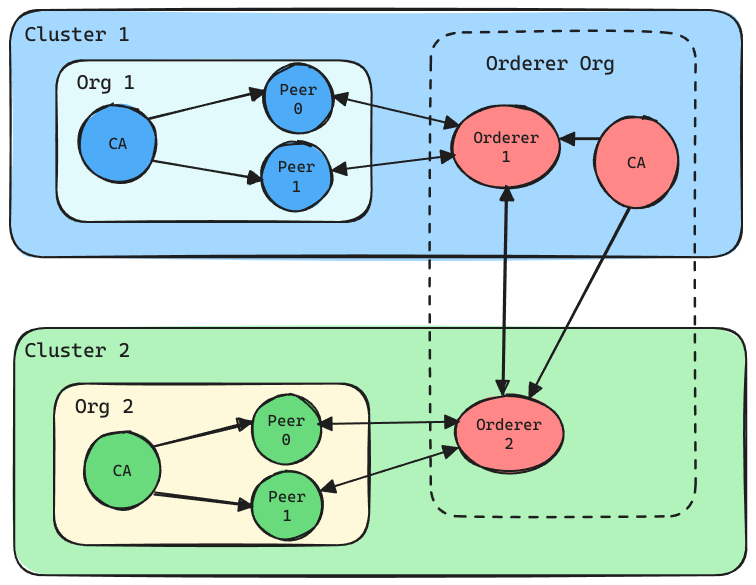

Before delving into the setup process, let’s grasp the architecture we’re aiming to achieve. In our scenario, we’re designing a multi-cluster Hyperledger Fabric network to facilitate collaboration between multiple organizations while maintaining isolation and scalability. Here’s a brief overview:

Organizations (Orgs): We have two distinct organizations, Org1 and Org2. Each organization operates independently and has its own Certificate Authority (CA) responsible for managing cryptographic identities.

Clusters: Org1 and Org2 are deployed on separate Kubernetes clusters (cluster1 and cluster2) for fault tolerance and resource isolation. Each cluster hosts its organization's peers and CA.

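Orderers: The ordering service, which orders transactions into blocks using Raft consensus, is run by a separate orderer organization (OrdererMSP). It has two ordering nodes, Orderer1 in cluster1 and Orderer2 in cluster2, so the ordering service spans both clusters. Keep in mind that Raft needs a majority of its nodes to be available: two orderers spread the service across clusters but cannot keep ordering if either node is lost, so three or more orderers are needed for real fault tolerance.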

This architecture ensures that each organization has control over its resources while facilitating cross-organization communication through shared channels and smart contracts.
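The orderers in this design use Raft (the etcdraft ordererType configured in Step 6), which only makes progress while a majority of ordering nodes are reachable. As a rough sketch of that arithmetic, the hypothetical bash helpers below (not part of Fabric or Hlf-Operator) compute the quorum for n nodes as floor(n/2) + 1 and the number of node failures tolerated as n minus that quorum:

```shell
#!/usr/bin/env bash
# Hypothetical helpers: Raft quorum size and tolerated failures for n orderers.
set -euo pipefail

raft_quorum() {
  local n=$1
  echo $(( n / 2 + 1 ))   # a strict majority of n nodes
}

raft_tolerated_failures() {
  local n=$1
  echo $(( n - $(raft_quorum "$n") ))
}

for n in 2 3 5; do
  echo "orderers=$n quorum=$(raft_quorum "$n") tolerated_failures=$(raft_tolerated_failures "$n")"
done
```

For the two orderers used in this guide the tolerated failure count is 0; a third orderer raises it to 1.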

Tools Used

To implement this architecture, we leverage the following tools:

Amazon Elastic Kubernetes Service (EKS): EKS simplifies the deployment, management, and scaling of Kubernetes clusters on Amazon Web Services (AWS), providing a reliable foundation for hosting our Fabric network.

Hlf-Operator: Hlf-Operator is a Kubernetes operator specifically designed to manage Hyperledger Fabric networks. It abstracts away many of the complex steps involved in deploying and managing a Fabric network.

Steps to Setup the Network

Now that we understand the architecture and tools involved, let’s examine the step-by-step setup process.

Step 1: Setup EKS Cluster

The first step is to create the Kubernetes clusters on which we’ll deploy our Fabric network components. We’ll use Amazon EKS for this purpose. Here’s an outline of the process:

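Cluster Configuration: Define the cluster configuration, including parameters such as instance types, number of instances, and availability zones. Save it as cluster1_config.yaml, and save a copy with the name set to cluster2 as cluster2_config.yaml.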

```yaml
apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig

metadata:
  name: cluster1 # use cluster2 for the second cluster
  region: us-east-1
  version: "1.29"

kubernetesNetworkConfig:
  ipFamily: IPv4

nodeGroups:
  - name: ng-1
    instanceType: t3.medium
    desiredCapacity: 2
    ssh:
      publicKeyPath: ~/infra/key.pub

availabilityZones: ["us-east-1a", "us-east-1b"]

iam:
  withOIDC: true
  serviceAccounts:
    - metadata:
        name: ebs-csi-controller-sa
        namespace: kube-system
      wellKnownPolicies:
        ebsCSIController: true

addons:
  - name: vpc-cni
    attachPolicyARNs:
      - arn:aws:iam::aws:policy/AmazonEKS_CNI_Policy
  - name: coredns
    version: latest
  - name: kube-proxy
    version: latest
  - name: aws-ebs-csi-driver
    wellKnownPolicies:
      ebsCSIController: true
```
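Because the two cluster configurations differ only in the cluster name, one way to avoid maintaining two copies by hand is to derive cluster2_config.yaml from cluster1_config.yaml. This is a minimal sketch: derive_cluster_config is a hypothetical helper, and it assumes the name line appears as name: cluster1 (optionally followed by a comment), as in the configuration above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: copy an eksctl config, renaming the cluster.
# Only a "name: cluster1" line is changed; other "name:" keys (e.g. ng-1) are untouched.
set -euo pipefail

derive_cluster_config() {
  local src=$1 dst=$2 new_name=$3
  # Keep indentation and any trailing comment, swap only the cluster name
  sed -E 's/^([[:space:]]*name:[[:space:]]*)cluster1([[:space:]]|$)/\1'"$new_name"'\2/' "$src" > "$dst"
}

# Only run when the source file is present in the current directory
if [[ -f cluster1_config.yaml ]]; then
  derive_cluster_config cluster1_config.yaml cluster2_config.yaml cluster2
fi
```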

Cluster Creation: Use eksctl, a command-line tool for managing EKS clusters, to create the clusters based on the configuration.

```shell
# Create cluster1
eksctl create cluster -f cluster1_config.yaml

# Create cluster2
eksctl create cluster -f cluster2_config.yaml
```

Kubeconfig Setup: Create kubeconfig files to enable access to the newly created clusters.

```shell
# Create cluster1 kubeconfig
aws eks update-kubeconfig --region us-east-1 --name cluster1 --kubeconfig ~/.kube/cluster1-config

# Create cluster2 kubeconfig
aws eks update-kubeconfig --region us-east-1 --name cluster2 --kubeconfig ~/.kube/cluster2-config
```
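Every later step switches between clusters by re-exporting KUBECONFIG. If you prefer, a small shell function can do this in one word. use_cluster is a hypothetical convenience wrapper, not part of eksctl or the AWS CLI, and it assumes the ~/.kube/<cluster>-config naming used above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: point kubectl, helm, and istioctl at cluster1 or cluster2.
# Assumes kubeconfigs were written to ~/.kube/<cluster>-config.

use_cluster() {
  local name=$1
  local cfg="$HOME/.kube/${name}-config"
  if [[ ! -f "$cfg" ]]; then
    echo "kubeconfig not found: $cfg" >&2
    return 1   # leave the current KUBECONFIG unchanged
  fi
  export KUBECONFIG="$cfg"
  echo "Using $name ($KUBECONFIG)"
}
```

For example, `use_cluster cluster2` replaces `export KUBECONFIG=~/.kube/cluster2-config`. The function must be sourced into your shell (not run as a script) for the export to persist.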

Step 2: Install Hlf-Operator and Istio

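Hlf-Operator simplifies the management of Fabric resources on Kubernetes, while Istio provides the ingress gateway through which peers, CAs, and orderers are exposed outside each cluster and routed by hostname. Here's how to set them up:

Hlf-Operator Installation: Add the Hlf-Operator Helm repository and install the operator on both clusters. The `kubectl hlf` commands used throughout this guide also require the operator's kubectl plugin on your workstation (installable with `kubectl krew install hlf`).

Istio Installation: Install Istio on both clusters with istioctl; its ingress gateway handles incoming traffic for the Fabric components.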

```shell
# Cluster 1
export KUBECONFIG=~/.kube/cluster1-config
helm repo add kfs https://kfsoftware.github.io/hlf-helm-charts --force-update
helm install hlf-operator --version=1.10.0 -- kfs/hlf-operator
istioctl install -y

# Cluster 2
export KUBECONFIG=~/.kube/cluster2-config
helm repo add kfs https://kfsoftware.github.io/hlf-helm-charts --force-update
helm install hlf-operator --version=1.10.0 -- kfs/hlf-operator
istioctl install -y
```

On EKS, the istio-ingressgateway Service is exposed through an AWS load balancer. Run the following command on each cluster and note the hostname in the EXTERNAL-IP column; we need these values when configuring DNS.

```shell
kubectl get svc istio-ingressgateway -n istio-system
```

Step 3: Configure DNS

DNS configuration is crucial because every Fabric component is reached through its own hostname: the Istio ingress gateway routes incoming connections by hostname, so each name must resolve to the load balancer of the cluster that hosts that component. This involves:

Hosted Zone Setup: Configure DNS records in AWS Route 53 for the domain associated with your Fabric network.

Record Creation: Create records for each service, including peers, CAs, and orderers, associating them with their respective domain names.

Point each record at the external hostname of the cluster that hosts the component. For example, for peer0 of Org1, set the subdomain to peer0.org1.fabric, the record type to CNAME, and the value to cluster1's external hostname (the EXTERNAL-IP value obtained above).

Configure the following records:

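| Component | Hostname | Cluster (CNAME target) |
|---|---|---|
| Org1 Peer0 | peer0.org1.fabric.example.com | cluster1 |
| Org1 Peer1 | peer1.org1.fabric.example.com | cluster1 |
| Org1 CA (org1-ca) | ca.org1.fabric.example.com | cluster1 |
| Org2 Peer0 | peer0.org2.fabric.example.com | cluster2 |
| Org2 Peer1 | peer1.org2.fabric.example.com | cluster2 |
| Org2 CA (org2-ca) | ca.org2.fabric.example.com | cluster2 |
| Orderer1 | ord1.orderer.fabric.example.com | cluster1 |
| Orderer1 admin host | admin-ord1.orderer.fabric.example.com | cluster1 |
| Orderer2 | ord2.orderer.fabric.example.com | cluster2 |
| Orderer2 admin host | admin-ord2.orderer.fabric.example.com | cluster2 |
| Orderer CA (ord-ca) | ca.orderer.fabric.example.com | cluster1 |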
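With eleven records across two clusters, creating them by hand in the console is error-prone. As a sketch of scripting it instead, the bash below writes a Route 53 change batch that UPSERTs one CNAME per hostname listed above. CLUSTER1_LB and CLUSTER2_LB are placeholders for the EXTERNAL-IP values from Step 2, build_change_batch is a hypothetical helper, and the 300-second TTL is an arbitrary choice.

```shell
#!/usr/bin/env bash
# Sketch: write a Route 53 change batch with one CNAME per Fabric hostname.
# CLUSTER1_LB / CLUSTER2_LB are placeholders; they could be read per cluster with
#   kubectl get svc istio-ingressgateway -n istio-system \
#     -o jsonpath='{.status.loadBalancer.ingress[0].hostname}'
set -euo pipefail

CLUSTER1_LB="${CLUSTER1_LB:-cluster1-lb.example.elb.amazonaws.com}"
CLUSTER2_LB="${CLUSTER2_LB:-cluster2-lb.example.elb.amazonaws.com}"

# "<hostname> <target>" pairs, one per record listed above
RECORDS=(
  "peer0.org1.fabric.example.com $CLUSTER1_LB"
  "peer1.org1.fabric.example.com $CLUSTER1_LB"
  "ca.org1.fabric.example.com $CLUSTER1_LB"
  "peer0.org2.fabric.example.com $CLUSTER2_LB"
  "peer1.org2.fabric.example.com $CLUSTER2_LB"
  "ca.org2.fabric.example.com $CLUSTER2_LB"
  "ord1.orderer.fabric.example.com $CLUSTER1_LB"
  "admin-ord1.orderer.fabric.example.com $CLUSTER1_LB"
  "ca.orderer.fabric.example.com $CLUSTER1_LB"
  "ord2.orderer.fabric.example.com $CLUSTER2_LB"
  "admin-ord2.orderer.fabric.example.com $CLUSTER2_LB"
)

build_change_batch() {
  local sep="" name target record
  printf '{"Changes":['
  for record in "${RECORDS[@]}"; do
    read -r name target <<< "$record"
    printf '%s{"Action":"UPSERT","ResourceRecordSet":{"Name":"%s","Type":"CNAME","TTL":300,"ResourceRecords":[{"Value":"%s"}]}}' \
      "$sep" "$name" "$target"
    sep=","   # comma-separate every entry after the first
  done
  printf ']}\n'
}

build_change_batch > dns-changes.json
```

The resulting file can then be applied with `aws route53 change-resource-record-sets --hosted-zone-id <your-zone-id> --change-batch file://dns-changes.json`.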

Step 4: Setup Organization CAs and Peers

With the infrastructure in place, we can now deploy the Fabric components for each organization: a Certificate Authority and two peers per organization. The orderers follow in Step 5.

Certificate Authorities (CA) – The CA is responsible for issuing cryptographic identities.

Peers – Peers enable transaction processing and chaincode execution.

Org1 Setup

```shell
export KUBECONFIG=~/.kube/cluster1-config
mkdir -p resources/org1   # the --output redirects below need this directory

# Create Org1 CA
kubectl hlf ca create \
  --storage-class gp2 \
  --capacity 1Gi \
  --name org1-ca \
  --hosts ca.org1.fabric.example.com \
  --enroll-id enroll \
  --enroll-pw enrollpw \
  --output > resources/org1/ca.yaml

kubectl apply -f resources/org1/ca.yaml

# Wait for the CA before registering identities against it
kubectl wait --timeout 180s --for condition=Running fabriccas.hlf.kungfusoftware.es --all

# Register Peer Identity
kubectl hlf ca register \
  --name org1-ca \
  --user peer \
  --secret peerpw \
  --type peer \
  --enroll-id enroll \
  --enroll-secret enrollpw \
  --mspid Org1MSP

# Deploy Org1 Peer0
kubectl hlf peer create \
  --storage-class gp2 \
  --enroll-id peer \
  --enroll-pw peerpw \
  --mspid Org1MSP \
  --capacity 2Gi \
  --name peer0-org1 \
  --ca-name org1-ca.default \
  --hosts peer0.org1.fabric.example.com \
  --istio-ingressgateway ingressgateway \
  --istio-port 443 \
  --output > resources/org1/peer0.yaml

kubectl apply -f resources/org1/peer0.yaml

# Deploy Org1 Peer1
kubectl hlf peer create \
  --storage-class gp2 \
  --enroll-id peer \
  --enroll-pw peerpw \
  --mspid Org1MSP \
  --capacity 2Gi \
  --name peer1-org1 \
  --ca-name org1-ca.default \
  --hosts peer1.org1.fabric.example.com \
  --istio-ingressgateway ingressgateway \
  --istio-port 443 \
  --output > resources/org1/peer1.yaml

kubectl apply -f resources/org1/peer1.yaml

# Wait for CA, Peers deployment
kubectl wait --timeout 180s --for condition=Running fabriccas.hlf.kungfusoftware.es --all
kubectl wait --timeout 180s --for condition=Running fabricpeers.hlf.kungfusoftware.es --all
```

Note: If kubectl apply fails with a "strict decoding error", delete the fields named in the error message (usually fields with a null value) from the generated YAML file and apply it again.
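To spot such fields before applying a generated manifest, a quick grep-based check can list every key whose value is a literal null. This is a rough text match, not a YAML parser, and null_fields is a hypothetical helper name; the fields kubectl actually rejects are the ones named in its error message.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print "file:line:  key: null" for every null-valued key
# in the given YAML files (plain text matching, not full YAML parsing).
null_fields() {
  grep -nHE '^[[:space:]]*(- )?[A-Za-z0-9_.-]+:[[:space:]]*null[[:space:]]*$' "$@"
}
```

For example, `null_fields resources/org1/*.yaml` lists candidates across all Org1 manifests at once.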

Org2 Setup

```shell
export KUBECONFIG=~/.kube/cluster2-config
mkdir -p resources/org2   # the --output redirects below need this directory

# Deploy Org2 CA
kubectl hlf ca create \
  --storage-class gp2 \
  --capacity 1Gi \
  --name org2-ca \
  --hosts ca.org2.fabric.example.com \
  --enroll-id enroll \
  --enroll-pw enrollpw \
  --output > resources/org2/ca.yaml

kubectl apply -f resources/org2/ca.yaml

# Wait for the CA before registering identities against it
kubectl wait --timeout 180s --for condition=Running fabriccas.hlf.kungfusoftware.es --all

# Register Org2 Peer Identity
kubectl hlf ca register \
  --name org2-ca \
  --user peer \
  --secret peerpw \
  --type peer \
  --enroll-id enroll \
  --enroll-secret enrollpw \
  --mspid Org2MSP

# Deploy Org2 Peer0
kubectl hlf peer create \
  --storage-class gp2 \
  --enroll-id peer \
  --enroll-pw peerpw \
  --mspid Org2MSP \
  --capacity 2Gi \
  --name peer0-org2 \
  --ca-name org2-ca.default \
  --hosts peer0.org2.fabric.example.com \
  --istio-ingressgateway ingressgateway \
  --istio-port 443 \
  --output > resources/org2/peer0.yaml

kubectl apply -f resources/org2/peer0.yaml

# Deploy Org2 Peer1
kubectl hlf peer create \
  --storage-class gp2 \
  --enroll-id peer \
  --enroll-pw peerpw \
  --mspid Org2MSP \
  --capacity 2Gi \
  --name peer1-org2 \
  --ca-name org2-ca.default \
  --hosts peer1.org2.fabric.example.com \
  --istio-ingressgateway ingressgateway \
  --istio-port 443 \
  --output > resources/org2/peer1.yaml

kubectl apply -f resources/org2/peer1.yaml

# Wait for CA, Peers deployment
kubectl wait --timeout 180s --for condition=Running fabriccas.hlf.kungfusoftware.es --all
kubectl wait --timeout 180s --for condition=Running fabricpeers.hlf.kungfusoftware.es --all
```

Step 5: Create Orderer Organization

The orderer organization runs the ordering service, which orders endorsed transactions into blocks and distributes them to the peers. We'll set up the orderer components, including:

CA Creation: Deploy the CA for the orderer organization to manage cryptographic identities.

Orderer Deployment: Deploy two orderers, one in each cluster, so the ordering service spans both clusters.

Create Orderer CA

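```shell
export KUBECONFIG=~/.kube/cluster1-config
mkdir -p resources/orderer   # the --output redirects below need this directory

# Deploy Orderer CA
kubectl hlf ca create \
  --storage-class gp2 \
  --capacity 1Gi \
  --name ord-ca \
  --enroll-id enroll \
  --enroll-pw enrollpw \
  --hosts ca.orderer.fabric.example.com \
  --output > resources/orderer/ca.yaml

kubectl apply -f resources/orderer/ca.yaml

# Wait for the CA before registering identities against it
kubectl wait --timeout 180s --for condition=Running fabriccas.hlf.kungfusoftware.es --all
```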

Deploy Orderer1

```shell
export KUBECONFIG=~/.kube/cluster1-config

# Register Orderer1 Identity
kubectl hlf ca register \
  --name ord-ca \
  --user orderer1 \
  --secret orderer1pw \
  --type orderer \
  --enroll-id enroll \
  --enroll-secret enrollpw \
  --mspid OrdererMSP

# Deploy Orderer1
kubectl hlf ordnode create \
  --storage-class gp2 \
  --enroll-id orderer1 \
  --mspid OrdererMSP \
  --enroll-pw orderer1pw \
  --capacity 1Gi \
  --name ord1-node \
  --ca-name ord-ca.default \
  --hosts ord1.orderer.fabric.example.com \
  --admin-hosts admin-ord1.orderer.fabric.example.com \
  --istio-ingressgateway ingressgateway \
  --istio-port 443 \
  --output > resources/orderer/orderer1.yaml

kubectl apply -f resources/orderer/orderer1.yaml
```

Deploy Orderer2

Create Orderer2 User

```shell
# The orderer CA runs in cluster1, so point KUBECONFIG at cluster1
export KUBECONFIG=~/.kube/cluster1-config

# Register Orderer2 Identity
kubectl hlf ca register \
  --name ord-ca \
  --user orderer2 \
  --secret orderer2pw \
  --type orderer \
  --enroll-id enroll \
  --enroll-secret enrollpw \
  --mspid OrdererMSP
```

Generate Orderer2 configuration

Currently, the HLF Operator can only generate an ordering node's configuration from a CA running in the same cluster, and the orderer CA (ord-ca) lives in cluster1. To work around this limitation, we generate the Orderer2 configuration against the Org2 CA in cluster2 and then manually point it at the orderer CA before deployment.

```shell
export KUBECONFIG=~/.kube/cluster2-config

# Generate the Orderer2 manifest (edited by hand before it is applied)
kubectl hlf ordnode create \
  --storage-class gp2 \
  --enroll-id orderer2 \
  --mspid OrdererMSP \
  --enroll-pw orderer2pw \
  --capacity 1Gi \
  --name ord2-node \
  --ca-name org2-ca.default \
  --hosts ord2.orderer.fabric.example.com \
  --admin-hosts admin-ord2.orderer.fabric.example.com \
  --istio-ingressgateway ingressgateway \
  --istio-port 443 \
  --output > resources/orderer/orderer2.yaml
```

The following changes are required in the file resources/orderer/orderer2.yaml:

1. Set spec.secret.enrollment.component.cahost to "ca.orderer.fabric.example.com"

2. Set spec.secret.enrollment.tls.cahost to "ca.orderer.fabric.example.com"

3. Add "ca.orderer.fabric.example.com" to spec.secret.enrollment.tls.csr.hosts

4. Copy the spec.secret.enrollment.component.catls.cacert value from resources/orderer/orderer1.yaml into both of the following properties in resources/orderer/orderer2.yaml:

a. spec.secret.enrollment.component.catls.cacert

b. spec.secret.enrollment.tls.catls.cacert

Deploy Orderer2

```shell
export KUBECONFIG=~/.kube/cluster2-config
kubectl apply -f resources/orderer/orderer2.yaml
```

Wait for Orderer Org CA and orderers deployment

```shell
# Cluster 1: orderer CA and Orderer1
export KUBECONFIG=~/.kube/cluster1-config
kubectl wait --timeout 180s --for condition=Running fabriccas.hlf.kungfusoftware.es --all
kubectl wait --timeout 180s --for condition=Running fabricorderernodes.hlf.kungfusoftware.es --all

# Cluster 2: Orderer2
export KUBECONFIG=~/.kube/cluster2-config
kubectl wait --timeout 180s --for condition=Running fabricorderernodes.hlf.kungfusoftware.es --all
```

At this stage, all CAs, peers, and orderers should be up and running. Next, we create a channel that includes both organizations and their peers.

Step 6: Create Channel

Channels in Hyperledger Fabric facilitate private communication and transaction flow between specific network participants. Here’s how to create and configure a channel:

Identities Secret Creation: Create a secret containing cryptographic identities for managing the channel.

We need admin identities to create the channel, so let's register and enroll them first.

Register and enroll the OrdererMSP admin identity

```shell
export KUBECONFIG=~/.kube/cluster1-config

# Register Orderer Admin
kubectl hlf ca register --name=ord-ca --user=admin --secret=adminpw \
  --type=admin --enroll-id enroll --enroll-secret=enrollpw --mspid=OrdererMSP

# Enroll Orderer Admin
kubectl hlf ca enroll --name=ord-ca --namespace=default \
  --user=admin --secret=adminpw --mspid OrdererMSP \
  --ca-name tlsca --output orderermsp.yaml
```

Register and enroll the Org1MSP admin identity with the TLS CA

```shell
export KUBECONFIG=~/.kube/cluster1-config

kubectl hlf ca register --name=org1-ca --user=admin --secret=adminpw \
  --type=admin --enroll-id enroll --enroll-secret=enrollpw --mspid=Org1MSP

kubectl hlf ca enroll --name=org1-ca --namespace=default \
  --user=admin --secret=adminpw --mspid Org1MSP \
  --ca-name tlsca --output org1msp-tlsca.yaml
```

Register and enroll the Org1MSP admin identity

The admin user was already registered in the previous step, so the register command here may report that the identity is already registered; that error can be ignored.

```shell
export KUBECONFIG=~/.kube/cluster1-config

kubectl hlf ca register --name=org1-ca --namespace=default --user=admin --secret=adminpw \
  --type=admin --enroll-id enroll --enroll-secret=enrollpw --mspid=Org1MSP

kubectl hlf ca enroll --name=org1-ca --namespace=default \
  --user=admin --secret=adminpw --mspid Org1MSP \
  --ca-name ca --output org1msp.yaml

kubectl hlf identity create --name org1-admin --namespace default \
  --ca-name org1-ca --ca-namespace default \
  --ca ca --mspid Org1MSP --enroll-id admin --enroll-secret adminpw
```

Register and enroll the Org2MSP admin identity

```shell
export KUBECONFIG=~/.kube/cluster2-config

kubectl hlf ca register --name=org2-ca --namespace=default --user=admin --secret=adminpw \
  --type=admin --enroll-id enroll --enroll-secret=enrollpw --mspid=Org2MSP

kubectl hlf ca enroll --name=org2-ca --namespace=default \
  --user=admin --secret=adminpw --mspid Org2MSP \
  --ca-name ca --output org2msp.yaml

kubectl hlf identity create --name org2-admin --namespace default \
  --ca-name org2-ca --ca-namespace default \
  --ca ca --mspid Org2MSP --enroll-id admin --enroll-secret adminpw
```

Create the secret

```shell
# Cluster 1: Org1 and orderer admin identities
export KUBECONFIG=~/.kube/cluster1-config
kubectl create secret generic wallet --namespace=default \
  --from-file=org1msp.yaml=$PWD/org1msp.yaml \
  --from-file=orderermsp.yaml=$PWD/orderermsp.yaml

# Cluster 2: Org2 and orderer admin identities
export KUBECONFIG=~/.kube/cluster2-config
kubectl create secret generic wallet --namespace=default \
  --from-file=org2msp.yaml=$PWD/org2msp.yaml \
  --from-file=orderermsp.yaml=$PWD/orderermsp.yaml
```

Channel Initialization: Initialize the main channel, specifying participating organizations, orderers, and channel policies.

Create main channel

```shell
export KUBECONFIG=~/.kube/cluster1-config

# Eight spaces used to indent every line of the multi-line PEM certificates,
# so they nest correctly under the YAML block scalars in the manifest below
export IDENT_8=$(printf "%8s" "")

export ORDERER_TLS_CERT=$(kubectl get fabriccas ord-ca -o=jsonpath='{.status.tlsca_cert}' | sed -e "s/^/${IDENT_8}/" )
export ORDERER0_TLS_CERT=$(kubectl get fabricorderernodes ord1-node -o=jsonpath='{.status.tlsCert}' | sed -e "s/^/${IDENT_8}/" )

export KUBECONFIG=~/.kube/cluster2-config

# Org2's CA runs in cluster2, so its root certificates come from org2-ca
export ORG2_SIGN_CERT=$(kubectl get fabriccas org2-ca -o=jsonpath='{.status.ca_cert}' | sed -e "s/^/${IDENT_8}/" )
export ORG2_TLS_CERT=$(kubectl get fabriccas org2-ca -o=jsonpath='{.status.tlsca_cert}' | sed -e "s/^/${IDENT_8}/" )
export ORDERER1_TLS_CERT=$(kubectl get fabricorderernodes ord2-node -o=jsonpath='{.status.tlsCert}' | sed -e "s/^/${IDENT_8}/" )

export KUBECONFIG=~/.kube/cluster1-config

# The certificate placeholders start at column 0 because each
# certificate line already carries its eight spaces of indentation
kubectl apply -f - <<EOF
apiVersion: hlf.kungfusoftware.es/v1alpha1
kind: FabricMainChannel
metadata:
  name: mychannel
spec:
  name: mychannel
  adminOrdererOrganizations:
    - mspID: OrdererMSP
  adminPeerOrganizations:
    - mspID: Org1MSP
  channelConfig:
    application:
      acls: null
      capabilities:
        - V2_0
      policies: null
    capabilities:
      - V2_0
    orderer:
      batchSize:
        absoluteMaxBytes: 1048576
        maxMessageCount: 10
        preferredMaxBytes: 524288
      batchTimeout: 2s
      capabilities:
        - V2_0
      etcdRaft:
        options:
          electionTick: 10
          heartbeatTick: 1
          maxInflightBlocks: 5
          snapshotIntervalSize: 16777216
          tickInterval: 500ms
      ordererType: etcdraft
      policies: null
      state: STATE_NORMAL
    policies: null
  externalOrdererOrganizations: []
  peerOrganizations:
    - mspID: Org1MSP
      caName: "org1-ca"
      caNamespace: "default"
  identities:
    OrdererMSP:
      secretKey: orderermsp.yaml
      secretName: wallet
      secretNamespace: default
    Org1MSP:
      secretKey: org1msp.yaml
      secretName: wallet
      secretNamespace: default
  externalPeerOrganizations:
    - mspID: Org2MSP
      tlsRootCert: |
${ORG2_TLS_CERT}
      signRootCert: |
${ORG2_SIGN_CERT}
  ordererOrganizations:
    - caName: "ord-ca"
      caNamespace: "default"
      externalOrderersToJoin:
        - host: admin-ord2.orderer.fabric.example.com
          port: 443
      mspID: OrdererMSP
      ordererEndpoints:
        - ord1.orderer.fabric.example.com:443
        - ord2.orderer.fabric.example.com:443
      orderersToJoin:
        - name: ord1-node
          namespace: default
  orderers:
    - host: ord1.orderer.fabric.example.com
      port: 443
      tlsCert: |-
${ORDERER0_TLS_CERT}
    - host: ord2.orderer.fabric.example.com
      port: 443
      tlsCert: |-
${ORDERER1_TLS_CERT}
EOF
```
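The certificate variables are piped through sed because a PEM certificate spans several lines, and every one of those lines has to be indented deeper than its YAML key (for example tlsCert: |-) to stay inside the block scalar. A self-contained sketch of the same technique, using a fake three-line "certificate" in place of the kubectl output (FAKE_CERT, INDENTED_CERT, and orderers-snippet.yaml are illustrative names only):

```shell
#!/usr/bin/env bash
# Sketch: indent every line of a multi-line value before substituting it
# into a YAML block scalar inside a heredoc.
set -euo pipefail

IDENT_8=$(printf "%8s" "")

# Stand-in for: kubectl get fabricorderernodes ... -o=jsonpath='{.status.tlsCert}'
FAKE_CERT=$'-----BEGIN CERTIFICATE-----\nMIIBfake\n-----END CERTIFICATE-----'

# Prefix each line with eight spaces
INDENTED_CERT=$(printf '%s\n' "$FAKE_CERT" | sed -e "s/^/${IDENT_8}/")

# The placeholder sits at column 0; the indentation comes from sed
cat > orderers-snippet.yaml <<EOF
spec:
  orderers:
    - host: ord1.orderer.fabric.example.com
      port: 443
      tlsCert: |-
${INDENTED_CERT}
EOF
```

Without the sed step, only the first certificate line would be indented (by the heredoc text itself) and the remaining lines would fall outside the block scalar, producing invalid YAML.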

Peer Joining: Join peers from Org1 and Org2 to the channel, establishing their membership and enabling transaction endorsement.

Create Follower Channel In Org1

```shell
export KUBECONFIG=~/.kube/cluster1-config
export IDENT_8=$(printf "%8s" "")
export ORDERER0_TLS_CERT=$(kubectl get fabricorderernodes ord1-node -o=jsonpath='{.status.tlsCert}' | sed -e "s/^/${IDENT_8}/" )

kubectl apply -f - <<EOF
apiVersion: hlf.kungfusoftware.es/v1alpha1
kind: FabricFollowerChannel
metadata:
  name: mychannel-org1msp
spec:
  anchorPeers:
    - host: peer0.org1.fabric.example.com
      port: 443
  hlfIdentity:
    secretKey: org1msp.yaml
    secretName: wallet
    secretNamespace: default
  mspId: Org1MSP
  name: mychannel
  externalPeersToJoin: []
  orderers:
    - certificate: |
${ORDERER0_TLS_CERT}
      url: grpcs://ord1-node.default:7050
  peersToJoin:
    - name: peer0-org1
      namespace: default
    - name: peer1-org1
      namespace: default
EOF
```

Create Follower Channel In Org2

```shell
export KUBECONFIG=~/.kube/cluster2-config
export IDENT_8=$(printf "%8s" "")
export ORDERER1_TLS_CERT=$(kubectl get fabricorderernodes ord2-node -o=jsonpath='{.status.tlsCert}' | sed -e "s/^/${IDENT_8}/" )

kubectl apply -f - <<EOF
apiVersion: hlf.kungfusoftware.es/v1alpha1
kind: FabricFollowerChannel
metadata:
  name: mychannel-org2msp
spec:
  anchorPeers:
    - host: peer0.org2.fabric.example.com
      port: 443
  hlfIdentity:
    secretKey: org2msp.yaml
    secretName: wallet
    secretNamespace: default
  mspId: Org2MSP
  name: mychannel
  externalPeersToJoin: []
  orderers:
    - certificate: |
${ORDERER1_TLS_CERT}
      url: grpcs://ord2-node.default:7050
  peersToJoin:
    - name: peer0-org2
      namespace: default
    - name: peer1-org2
      namespace: default
EOF
```

Step 7: Install Chaincode

Chaincode, also known as smart contracts, governs the logic of transactions on the Fabric network. We'll package the chaincode, then install, deploy, approve, and commit it on peers from both organizations:

Package Chaincode As A Service

```shell
export CHAINCODE_NAME=basictransfer
export CHAINCODE_LABEL=basictransfer_1.0

cat << METADATA-EOF > "metadata.json"
{
    "type": "ccaas",
    "label": "${CHAINCODE_LABEL}"
}
METADATA-EOF

cat > "connection.json" <<CONN_EOF
{
    "address": "${CHAINCODE_NAME}:7052",
    "dial_timeout": "10s",
    "tls_required": false
}
CONN_EOF

tar cfz code.tar.gz connection.json
tar cfz basictransfer-external.tgz metadata.json code.tar.gz
```
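For the chaincode-as-a-service builder, the outer package must contain metadata.json (with type ccaas) and code.tar.gz, and code.tar.gz must contain connection.json. A small sanity check you could run before installing; check_ccaas_package is a hypothetical helper that only inspects the archive contents, not whether the address in connection.json is reachable.

```shell
#!/usr/bin/env bash
# Hypothetical helper: sanity-check a chaincode-as-a-service package layout.
set -euo pipefail

check_ccaas_package() {
  local pkg=$1 work status=0
  work=$(mktemp -d)
  tar xzf "$pkg" -C "$work"
  if [[ ! -f "$work/metadata.json" || ! -f "$work/code.tar.gz" ]]; then
    echo "package must contain metadata.json and code.tar.gz" >&2
    status=1
  elif ! grep -q '"type": *"ccaas"' "$work/metadata.json"; then
    echo "metadata.json type is not ccaas" >&2
    status=1
  else
    # List the inner archive to a file (avoids a pipe into grep -q)
    tar tzf "$work/code.tar.gz" > "$work/code-listing.txt"
    if ! grep -qx 'connection.json' "$work/code-listing.txt"; then
      echo "code.tar.gz has no connection.json" >&2
      status=1
    fi
  fi
  rm -rf "$work"
  [[ $status -eq 0 ]] && echo "OK: $pkg"
  return "$status"
}
```

For example, `check_ccaas_package basictransfer-external.tgz` should print `OK: basictransfer-external.tgz` for the package built above.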

Install Chaincode – Org1

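Prepare the connection profile

```shell
export KUBECONFIG=~/.kube/cluster1-config

# Generate a network config (connection profile) for Org1 and the orderers
kubectl hlf inspect --output org1.yaml -o Org1MSP -o OrdererMSP

# Register and enroll an Org1 admin, then add it to the connection profile
# (admin may already be registered from Step 6; an "already registered" error is harmless)
kubectl hlf ca register --name=org1-ca --user=admin --secret=adminpw --type=admin \
  --enroll-id enroll --enroll-secret=enrollpw --mspid Org1MSP
kubectl hlf ca enroll --name=org1-ca --user=admin --secret=adminpw --mspid Org1MSP \
  --ca-name ca --output peer-org1.yaml
kubectl hlf utils adduser --userPath=peer-org1.yaml --config=org1.yaml --username=admin --mspid=Org1MSP
```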

Install, Deploy, Approve, Commit Chaincode – Org1

Refer to this page for creating the chaincode Docker image, which is used to run the smart contract as an external service.

```shell
export KUBECONFIG=~/.kube/cluster1-config
export SEQUENCE=1
export VERSION="1.0"
export LANGUAGE=golang
export PACKAGE_ID=$(kubectl hlf chaincode calculatepackageid --path=./basictransfer-external.tgz --language=$LANGUAGE --label=$CHAINCODE_LABEL)
echo "PACKAGE_ID=$PACKAGE_ID"

# Install - peer0
kubectl hlf chaincode install --path=./basictransfer-external.tgz \
  --config=org1.yaml --language=$LANGUAGE --label=$CHAINCODE_LABEL --user=admin --peer=peer0-org1.default

# Install - peer1
kubectl hlf chaincode install --path=./basictransfer-external.tgz \
  --config=org1.yaml --language=$LANGUAGE --label=$CHAINCODE_LABEL --user=admin --peer=peer1-org1.default

# Deploy
kubectl hlf externalchaincode sync --image=<username>/chaincode:latest \
  --name=$CHAINCODE_NAME \
  --namespace=default \
  --package-id=$PACKAGE_ID \
  --tls-required=false \
  --replicas=1

# Approve
kubectl hlf chaincode approveformyorg --config=org1.yaml --user=admin --peer=peer0-org1.default \
  --package-id=$PACKAGE_ID \
  --version "$VERSION" --sequence "$SEQUENCE" --name=basictransfer \
  --policy='OR('\''Org1MSP.member'\'','\''Org2MSP.member'\'')' --channel=mychannel

# Commit
# With Fabric's default lifecycle endorsement policy, a majority of the channel's
# organizations (for two organizations, both) must approve before a commit succeeds.
# If this fails because Org2 has not approved yet, rerun it after Org2's approval.
kubectl hlf chaincode commit --config=org1.yaml --user=admin --mspid=Org1MSP \
  --version "$VERSION" --sequence "$SEQUENCE" --name=basictransfer \
  --policy='OR('\''Org1MSP.member'\'','\''Org2MSP.member'\'')' --channel=mychannel
```
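The --policy value is harder to read than it needs to be: each '\'' closes the single-quoted string, adds an escaped single quote, and reopens the string, so the CLI receives OR('Org1MSP.member','Org2MSP.member'), meaning a signature from a member of either organization satisfies endorsement. A small sketch showing that wrapping the expression in double quotes yields the identical argument (POLICY_ESCAPED and POLICY_DOUBLE are illustrative variable names):

```shell
#!/usr/bin/env bash
# Sketch: two equivalent ways of quoting the endorsement policy argument.
set -euo pipefail

POLICY_ESCAPED='OR('\''Org1MSP.member'\'','\''Org2MSP.member'\'')'
POLICY_DOUBLE="OR('Org1MSP.member','Org2MSP.member')"

printf '%s\n' "$POLICY_ESCAPED"
[[ "$POLICY_ESCAPED" == "$POLICY_DOUBLE" ]] && echo "identical"
```

So the approve and commit commands could equally pass `--policy="OR('Org1MSP.member','Org2MSP.member')"`.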

Install Chaincode – Org2

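Prepare the connection profile

```shell
export KUBECONFIG=~/.kube/cluster2-config

# Generate a network config (connection profile) for Org2 and the orderers
kubectl hlf inspect --output org2.yaml -o Org2MSP -o OrdererMSP

# Register and enroll an Org2 admin, then add it to the connection profile
# (admin may already be registered from Step 6; an "already registered" error is harmless)
kubectl hlf ca register --name=org2-ca --user=admin --secret=adminpw --type=admin \
  --enroll-id enroll --enroll-secret=enrollpw --mspid Org2MSP
kubectl hlf ca enroll --name=org2-ca --user=admin --secret=adminpw --mspid Org2MSP \
  --ca-name ca --output peer-org2.yaml
kubectl hlf utils adduser --userPath=peer-org2.yaml --config=org2.yaml --username=admin --mspid=Org2MSP
```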

Install, Deploy, Approve, Commit Chaincode – Org2

```shell
export KUBECONFIG=~/.kube/cluster2-config
export SEQUENCE=1
export VERSION="1.0"
export LANGUAGE=golang
export PACKAGE_ID=$(kubectl hlf chaincode calculatepackageid --path=./basictransfer-external.tgz --language=$LANGUAGE --label=$CHAINCODE_LABEL)
echo "PACKAGE_ID=$PACKAGE_ID"

# Install - peer0
kubectl hlf chaincode install --path=./basictransfer-external.tgz \
  --config=org2.yaml --language=$LANGUAGE --label=$CHAINCODE_LABEL --user=admin --peer=peer0-org2.default

# Install - peer1
kubectl hlf chaincode install --path=./basictransfer-external.tgz \
  --config=org2.yaml --language=$LANGUAGE --label=$CHAINCODE_LABEL --user=admin --peer=peer1-org2.default

# Deploy
kubectl hlf externalchaincode sync --image=<username>/chaincode:latest \
  --name=$CHAINCODE_NAME \
  --namespace=default \
  --package-id=$PACKAGE_ID \
  --tls-required=false \
  --replicas=1

# Approve
kubectl hlf chaincode approveformyorg --config=org2.yaml --user=admin --peer=peer0-org2.default \
  --package-id=$PACKAGE_ID \
  --version "$VERSION" --sequence "$SEQUENCE" --name=basictransfer \
  --policy='OR('\''Org1MSP.member'\'','\''Org2MSP.member'\'')' --channel=mychannel

# Commit
kubectl hlf chaincode commit --config=org2.yaml --user=admin --mspid=Org2MSP \
  --version "$VERSION" --sequence "$SEQUENCE" --name=basictransfer \
  --policy='OR('\''Org1MSP.member'\'','\''Org2MSP.member'\'')' --channel=mychannel
```

Step 8: Verify Network Functionality

Once the network is set up and components are deployed, it’s essential to verify its functionality:

Transaction Testing: Initiate transactions and smart contract invocations to ensure proper endorsement, validation, and commitment.
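As a hedged starting point for these tests: the kubectl-hlf plugin provides chaincode invoke and chaincode query subcommands. The function names InitLedger and GetAllAssets below are assumptions borrowed from Fabric's asset-transfer-basic sample and must be replaced with whatever your chaincode image actually exposes; run and smoke_test are hypothetical helpers, and setting DRY_RUN=1 prints the commands instead of executing them.

```shell
#!/usr/bin/env bash
# Sketch: smoke-test the committed chaincode from one organization.
# InitLedger / GetAllAssets are assumed function names; adjust to your chaincode.
# Set DRY_RUN=1 to print the commands instead of running them.
set -euo pipefail

CHANNEL=mychannel
CHAINCODE=basictransfer

run() {
  if [[ "${DRY_RUN:-0}" == "1" ]]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

smoke_test() {
  local config=$1 peer=$2
  # Submit a transaction: endorsed by peers, ordered, then committed
  run kubectl hlf chaincode invoke --config="$config" --user=admin --peer="$peer" \
    --chaincode="$CHAINCODE" --channel="$CHANNEL" --fcn=InitLedger -a '[]'
  # Read state back from a peer (queries are not sent to the orderers)
  run kubectl hlf chaincode query --config="$config" --user=admin --peer="$peer" \
    --chaincode="$CHAINCODE" --channel="$CHANNEL" --fcn=GetAllAssets -a '[]'
}
```

For example, run `smoke_test org1.yaml peer0-org1.default` with KUBECONFIG pointing at cluster1, then query through `org2.yaml` and `peer0-org2.default` in cluster2 to confirm that Org2's peers see the data committed through Org1.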

Conclusion

Setting up a multi-cluster, multi-organization Hyperledger Fabric network involves careful planning, configuration, and coordination of various components and tools. By following the steps outlined in this guide, you can establish a robust and scalable blockchain infrastructure capable of supporting diverse use cases and applications across industries.

Future Work

1. Automate the network setup process by developing scripts.

2. Expand the setup to support multi-cloud clusters—for example, deploying Org1 on AWS, Org2 on GCP, and Org3 on Azure.