March opened with a bang for DeepSeek. Marc Andreessen has described the company’s rise as a Sputnik moment for American AI companies. After rattling the AI race in late January, the Chinese startup revealed on 1 March that the theoretical cost-profit ratio of its V3 and R1 models is up to 545% per day. Although the company has cautioned that actual revenue would be significantly lower, the news is set to unsettle AI stocks further across the world. Unlike OpenAI’s models, DeepSeek’s R1 LLM is open-source, and that is its major tailwind.

Open-source AI can disrupt the tech ecosystem because it favors rapid innovation through collaboration. It democratizes access to AI technology by allowing anyone to contribute and use it. This promotes transparency and trust by enabling scrutiny of the code, and ultimately, it accelerates the development of better AI solutions by leveraging the collective expertise of a global community.

Open-source AI like DeepSeek is more accessible to startups and individual developers, and it reduces costs. Startups, especially at an early stage, work with limited resources. DeepSeek is a great option for innovation through community collaboration. It accelerates startup product development by leveraging existing models, promotes transparency and trust with accessible code, and is customizable to meet specific business needs. However, deploying DeepSeek requires a thorough knowledge of LLMs.

What is DeepSeek?

DeepSeek is a Chinese AI development firm. It released its R1 LLM on 20 January 2025 and disrupted the market because its reported development cost is only 3%-5% of what rivals like OpenAI and Google have incurred while developing their own models. R1, which also powers the DeepSeek AI assistant chatbot, comes with an open-source license, which means developers and companies can access it for free. The company also provides a mobile application and API access for its AI models.

Many have claimed that DeepSeek’s search feature is better than OpenAI’s and can challenge Google’s Gemini Deep Research. In fact, Perplexity, another rival, has started offering DeepSeek as a search option.

How Does DeepSeek Work?

DeepSeek’s approach is different from OpenAI’s. It uses a mixture-of-experts (MoE) architecture, in which the model contains multiple smaller networks (“experts”) and routes each input to only a few of them. This removes the need to involve all available computation resources for every input and makes the process more efficient. It also relies on multi-head latent attention (MLA) to reduce its memory footprint. Instead of storing the full attention keys and values for every token, as traditional models do, MLA compresses them into a smaller “latent” representation, which increases efficiency.
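To make the routing idea concrete, here is a minimal Python sketch of generic top-k expert routing. It is an illustration under assumptions, not DeepSeek’s actual implementation: the toy experts, the router scores, and the function names `route_top_k` and `moe_forward` are all hypothetical.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(router_scores, k=2):
    """Pick the k highest-scoring experts and renormalize their weights."""
    probs = softmax(router_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return {i: probs[i] / norm for i in top}

def moe_forward(x, experts, router_scores, k=2):
    """Weighted sum of the outputs of only the selected experts."""
    weights = route_top_k(router_scores, k)
    return sum(w * experts[i](x) for i, w in weights.items())

# Four toy "experts"; in a real model each one is a feed-forward network.
experts = [lambda x: x + 1, lambda x: x * 2, lambda x: x - 3, lambda x: x ** 2]
scores = [0.1, 2.0, -1.0, 1.5]  # hypothetical router scores for one input

chosen = route_top_k(scores, k=2)
output = moe_forward(3.0, experts, scores, k=2)
print("experts used:", sorted(chosen), "output:", output)
```

With `k=2`, only two of the four experts run for this input, which is where the compute savings come from.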

DeepSeek also depends on reinforcement learning (RL) for reasoning to avoid the brittleness of prescriptive datasets. Traditional models use supervised fine-tuning (SFT), where the models are trained on curated datasets for step-by-step reasoning, or chain-of-thought (CoT). However, DeepSeek hasn’t completely eliminated SFT. The team had to introduce some SFT at the final stage of development.

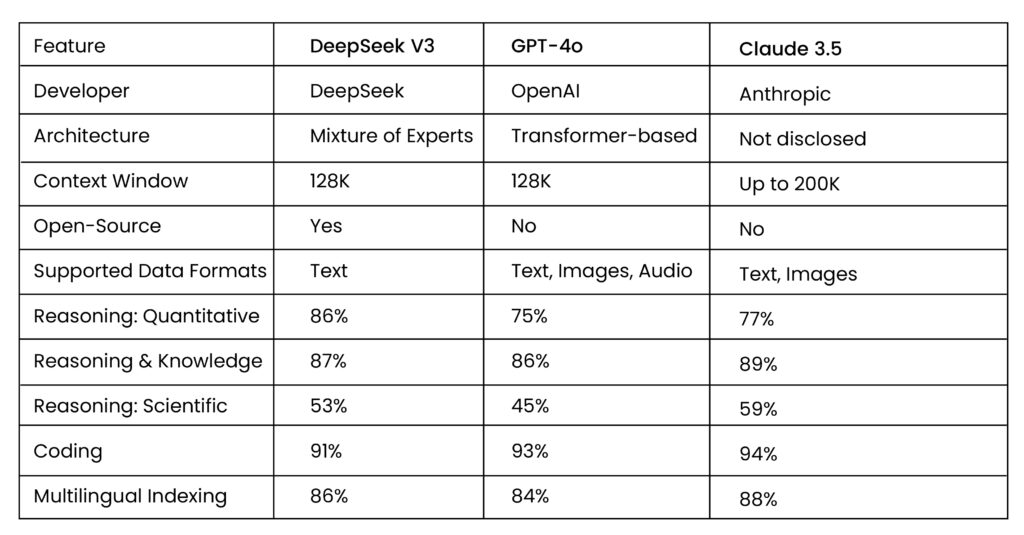

Where Does DeepSeek Stand Against GPT-4 and Claude?

The biggest players in the market at this point are OpenAI and Anthropic. Most companies now use either GPT-4o or Claude 3.5. Here is a comparison of DeepSeek with GPT and Claude.

Each has its distinct advantages. Startups can definitely benefit from DeepSeek, as it will lower their AI software product development costs significantly. Its reasoning and data privacy claims will also support startups. But if the requirements call for a multimodal model, then GPT-4o and Claude are better options.

Open-Source vs. Proprietary AI: Key Differences for Startups

Startups mostly start small, and very few operate in heavily regulated industries. What matters most to them is cost. Open-source has several advantages: it is free; it allows developers to modify and share code, which enhances customization and innovation; and its performance in specialized use cases can rival proprietary models.

However, there are some factors startups should consider before adopting open-source models. Open-source may not offer comprehensive support or built-in compliance measures, which are typically provided by proprietary AI models. Proprietary models also offer ready-to-deploy solutions, making them highly effective in regulated industries or large-scale applications. Additionally, built-in security measures and vendor-managed updates make proprietary models a safer option.

Although proprietary models may have a higher initial cost than open-source AI models, their pre-configured setups and ongoing maintenance can save effort and resources in the long run. When it comes to scalability, user-friendliness, and integration with existing systems, open-source solutions may present challenges for startups over time.

How Startups Can Leverage DeepSeek

DeepSeek has solved one problem for startups by making itself easily available to them. Now startups need to develop strategies where they can make the most out of it.

Product Development:

Minimal investment is a major priority for startups. Early-stage startups often struggle with funding, and open-source AI models like DeepSeek are an attractive option for them. DeepSeek’s model distillation is particularly beneficial, as it enables the creation of powerful AI models with lower computational requirements.
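As a rough illustration of what distillation optimizes, the sketch below computes the classic knowledge-distillation loss: the KL divergence between temperature-softened teacher and student output distributions. This is the textbook formulation, not necessarily the recipe DeepSeek uses, and the logits and function names are made up for the example.

```python
import math

def softmax(logits, temperature=1.0):
    """Softmax with a temperature; higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) on temperature-softened distributions."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))

# Hypothetical logits over three output classes.
teacher = [4.0, 1.0, 0.5]
close_student = [3.5, 1.2, 0.4]   # roughly agrees with the teacher
far_student = [0.5, 1.0, 4.0]     # disagrees with the teacher

loss_close = distillation_loss(teacher, close_student)
loss_far = distillation_loss(teacher, far_student)
print(f"close student: {loss_close:.4f}, far student: {loss_far:.4f}")
```

Training the smaller student to minimize this loss pushes its outputs toward the larger teacher’s, which is how a compact model can inherit much of the teacher’s behavior.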

DeepSeek’s emphasis on transparency can accelerate the decision-making process by providing insights into how its models arrive at conclusions. This is especially crucial for regulated industries such as healthcare and finance.

The choice of an AI model affects investment. Open-source models like DeepSeek-Coder (under the MIT license) allow cost-effective and flexible commercial and research applications, reducing financial barriers for AI adoption.

Automation & Efficiency:

Like other LLMs, DeepSeek can automate tasks like document summarization, data analysis, email drafting, and code generation, and reduce the time spent on repetitive manual work. The DeepSeek-V3 model has high inference speed and is reported to outperform OpenAI’s models in mathematical reasoning and real-time problem-solving. OpenAI’s models, on the other hand, are resource-intensive.

DeepSeek’s hardware and system-level optimizations are top-notch. Its memory compression and load balancing techniques maximize efficiency. For performance-critical parts, it uses low-level PTX instructions rather than relying only on higher-level CUDA code, which provides better control over GPU instruction execution. The DualPipe algorithm has helped DeepSeek overlap computation with communication between GPUs, enabling more effective computations during training.

In manufacturing, DeepSeek can adjust parameters and predict maintenance in real time. In customer service, DeepSeek can be transformative as it can be quick and accurate in providing responses and solving queries.

Scalability:

Open-source large language models (LLMs) like DeepSeek help startups scale their AI solutions as they grow. DeepSeek uses a hybrid architecture that combines Mixture of Experts (MoE) and Dense models. This makes it efficient for different types of tasks. Simple tasks use fewer resources, while complex ones get more computing power. This approach reduces costs without compromising performance. For example, a startup creating an AI-powered writing tool can start with a small model. As demand increases, it can expand to handle real-time text summarization, multilingual content, or personalized recommendations. The model’s flexibility allows businesses to scale without facing technical limitations.

DeepSeek is a great option for startups with specific AI needs, as it allows companies to fine-tune and customize their models. Unlike cloud-based solutions that come with high costs and limited customization, open-source LLMs give businesses more control and flexibility. This makes AI more affordable and effective.

Data Privacy & Control:

Self-hosted AI models give startups full control over their data. This improves privacy and security, especially for industries handling sensitive information. Finance, healthcare, and legal sectors must protect user data and follow strict regulations. A health-tech startup using AI for medical diagnostics can run models on its own servers. This ensures that patient data stays private and does not get exposed to external cloud services. It also helps meet compliance standards like HIPAA or GDPR.

Self-hosting also allows companies to strengthen security by customizing protections, and to audit and upgrade security without relying on third-party providers. As governments introduce stricter data privacy laws, self-hosted AI is becoming a better choice for businesses that need full control over their information.

Challenges and Considerations

DeepSeek’s cost is not the only factor that has raised eyebrows among pundits. There are also some red flags and challenges that startups must consider before adopting DeepSeek.

Computational Requirements:

Satya Nadella, in one of his recent tweets, mentioned Jevons’ Paradox, which could pose a challenge for DeepSeek. Efficiency gains reduce costs, which in turn drives increased consumption, ultimately offsetting the overall savings in energy and compute. The DeepSeek AI model has the potential to fall into this trap. However, it also has the ability to be transformative.

DeepSeek has already demonstrated its capabilities by achieving state-of-the-art performance without relying on the most advanced hardware. The company has challenged traditional approaches to AI model development, and this might mark the beginning of its efforts to outpace the paradox.

However, deploying advanced AI models requires robust infrastructure. Startups may encounter challenges related to computational power and storage. For example, deploying a 70B-parameter LLM requires approximately 70GB of GPU memory for the weights alone at 8-bit precision, and about 140GB at 16-bit precision. This underscores the need for strategic planning to optimize resources.
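The arithmetic behind such estimates is simple: weight memory is roughly the number of parameters multiplied by the bytes used per parameter. The sketch below is a back-of-the-envelope calculator, assuming 1 GB = 10^9 bytes and counting weights only; activations, the KV cache, and framework overhead come on top. The function name `weight_memory_gb` is illustrative.

```python
# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}

def weight_memory_gb(num_params, precision):
    """Memory for the model weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9

for precision in BYTES_PER_PARAM:
    gb = weight_memory_gb(70e9, precision)
    print(f"70B parameters at {precision}: about {gb:.0f} GB")
```

A 70GB figure for a 70B-parameter model therefore corresponds to 8-bit weights; the same model needs about twice that at 16-bit and about half at 4-bit.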

Model Fine-Tuning:

Startups don’t start with a great data bank. DeepSeek can work with less data, but that data has to be of good quality. Model fine-tuning requires data preparation to tune the model for a specific task. To make DeepSeek work, startups must have a certain level of expertise. They can either invest in building that expertise or acquire it from vendors for model optimization.

Unsloth is an optimization framework that can accelerate training and reduce memory consumption. It works well with LoRA (Low-Rank Adaptation) and GRPO (Group Relative Policy Optimization), making efficient use of GPU resources and enabling fine-tuning on consumer-grade hardware. Models can also be fine-tuned with Supervised Fine-Tuning (SFT) on Hugging Face datasets.
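To show why LoRA fits on modest hardware, here is a small pure-Python sketch of the idea: the original weight matrix W stays frozen, and only two thin matrices B and A are trained, with the effective weight being W + (alpha / r) * B * A. This does not use the Unsloth or Hugging Face APIs; the helper names and the tiny matrices are assumptions made for illustration.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def lora_merge(W, B, A, alpha, rank):
    """Effective weight after adaptation: W + (alpha / rank) * (B @ A)."""
    scale = alpha / rank
    delta = matmul(B, A)
    return [[w + scale * d for w, d in zip(w_row, d_row)]
            for w_row, d_row in zip(W, delta)]

def lora_trainable_params(d_out, d_in, rank):
    """Parameters in B (d_out x rank) plus A (rank x d_in)."""
    return rank * (d_out + d_in)

# Tiny example: a frozen 2x3 weight with a rank-1 update.
W = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
B = [[1.0], [2.0]]          # 2 x 1
A = [[0.5, 0.0, -0.5]]      # 1 x 3
merged = lora_merge(W, B, A, alpha=2, rank=1)

# Parameter savings for one 4096 x 4096 layer at rank 8.
full = 4096 * 4096
lora = lora_trainable_params(4096, 4096, rank=8)
print(f"full: {full}, LoRA: {lora}, ratio: {full // lora}x fewer")
```

Because only B and A receive gradients, the optimizer state and gradient memory shrink by roughly the same factor as the parameter count.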

Security & Ethical Considerations:

AI has always been at the center of controversy due to data bias. OpenAI, Google, Anthropic, and others work hard to reduce this bias and make AI more reliable. Is DeepSeek safe? This is one question that is bothering the AI community. DeepSeek’s search engine appears to be biased: user location and prompt language significantly impact the results. Recently, South Korea’s National Intelligence Service (NIS) reported that when DeepSeek was asked about the origin of kimchi in Korean, it answered Korea. However, when the same question was asked in Chinese, the answer was China.

Open-sourcing comes with a problem—anyone can inspect and modify the architecture. The same holds true for DeepSeek’s R1. Its pre-trained models can generate politically skewed views if trained on biased data. Additionally, DeepSeek’s core relies on model weights, which, if tampered with, could lead to data leaks.

DeepSeek collects a wide range of data, including user inputs, device information, and keystroke patterns. It stores this data in China, which is where concerns arise. The Chinese government can access data held by Chinese companies, making this data potentially vulnerable. At the same time, DeepSeek is banned on government devices in countries like Australia and is facing regulatory challenges in France, South Korea, and Ireland. To handle these risks, human supervision is crucial. Hard checks that stop unwanted outputs from being shown can also help.
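One way such hard checks might look in practice is a small post-processing guard that blocks responses containing sensitive patterns and routes borderline ones to a human reviewer. The sketch below is only a starting point under assumptions: the patterns, the review terms, and the `check_output` function are hypothetical and would need to be defined per product.

```python
import re

# Hypothetical rules; a real deployment would define its own.
BLOCKED_PATTERNS = {
    "email address": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "long digit sequence": re.compile(r"\b\d{9,}\b"),
}
REVIEW_TERMS = {"election", "territory", "sovereignty"}

def check_output(text):
    """Return ("block" | "review" | "allow", reasons) for a model response."""
    reasons = [name for name, pattern in BLOCKED_PATTERNS.items()
               if pattern.search(text)]
    if reasons:
        return "block", reasons
    lowered = text.lower()
    flagged = sorted(term for term in REVIEW_TERMS if term in lowered)
    if flagged:
        # Sensitive topic: hand the response to a human before showing it.
        return "review", flagged
    return "allow", []

for reply in ["Reach me at jane@example.com",
              "The election result was disputed.",
              "Here is your summary."]:
    print(check_output(reply))
```

Keyword rules like these are crude, so they work best as a first filter in front of human supervision rather than as a replacement for it.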

Key Considerations for Startups

- DeepSeek is one of the many innovations happening in the AI landscape. Entrepreneurs should make sure they have enough in their experiment pipeline and they are agile enough to experiment with new tools.

- The rise of open-source models challenges the dominance of proprietary solutions. As more startups and enterprises adopt open-source AI, there may be a significant shift towards more collaborative and transparent AI development practices.

- DeepSeek is designed to use less compute, and therefore less electricity, than comparable models. Tech leaders who favor green computing may look at it as a useful resource.

- Cheaper inference can trigger wider adoption and intensify competition. Decision-makers should plan their strategies accordingly to stay competitive in the market.

- Cost cutting should not be the only deciding factor. Businesses should make their innovation AI-driven. The impact radius should cover product development, customer personalization, and other new services.