After years of leading QA teams, I know that writing test cases and UI automation scripts eats up more time than it should. AI tools like ChatGPT and Gemini can help, but many companies won’t touch them because of data confidentiality concerns. And rightly so: passing sensitive test data to a third-party platform is a risk not worth taking.

That’s why I turned to self-hosted open-source LLMs. Models such as DeepSeek, Meta’s LLaMA, Mistral, or Qwen, when run locally with Ollama, keep your test data private while still boosting productivity.

In this guide, I’ll show you how to set up DeepSeek on your local machine, use it to generate manual test cases for different scenarios, and even create UI automation scripts using Playwright. You don’t need any prior knowledge of LLMs to get started.

By the end of this article, you’ll be able to incorporate a self-hosted LLM into your QA process with ease. But before we begin, let’s briefly look at why a self-hosted LLM is such a powerful tool in your QA arsenal.

Why use a self-hosted LLM for QA?

Self-hosted LLMs bring security, flexibility, and productivity to QA teams, especially when cloud-based tools like ChatGPT or Gemini aren’t an option due to privacy and security concerns. Here are some reasons why this makes sense:

- Data confidentiality – No external API calls are made, so your test data stays local.

- Faster test case writing – High-quality test cases are generated within seconds.

- Automated UI test scripts – Generate Cypress, Playwright, or Selenium scripts with AI assistance.

- Customizable & offline – Works without an Internet connection; you have full control over your data.

Setting up a self-hosted LLM for QA test generation

Let’s walk through the steps required to set up a self-hosted LLM. I will be using the DeepSeek-R1 (1.5B) model here, although you can switch to a larger model for better response quality.

Step 1: Prerequisites

Before we proceed further, ensure that your machine meets the following minimum requirements.

Operating systems: Windows, macOS, or Linux

CPU: At least a modern multi-core processor

RAM: Minimum of 8GB (Recommended: 16GB+)

Disk space: Minimum of 20GB free

GPU: Optional (for better performance, an NVIDIA GPU with 6GB+ VRAM is suggested)
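If you want a quick check of a machine against the disk and CPU figures above, a few lines of standard-library Python will do. This is only a rough sketch: the 20GB threshold comes from the list above, the function name `check_prerequisites` is hypothetical, and RAM is left out because the standard library has no portable way to read it.

```python
import os
import shutil

MIN_FREE_DISK_GB = 20  # taken from the prerequisites list above


def check_prerequisites(path="."):
    """Report free disk space (GB), logical CPU count, and whether disk is sufficient."""
    free_gb = shutil.disk_usage(path).free / (1024 ** 3)
    cpu_count = os.cpu_count() or 1  # os.cpu_count() can return None
    return {
        "free_disk_gb": round(free_gb, 1),
        "cpu_count": cpu_count,
        "disk_ok": free_gb >= MIN_FREE_DISK_GB,
    }


print(check_prerequisites())
```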

Step 2: Install Ollama

Let’s get our LLM running now! Ollama is an easy-to-use tool for running LLMs locally. You can download it from the official Ollama website and install it on macOS, Linux, or Windows.

Step 3: Install dependencies

Next, we need to set up Python. If you haven’t installed it yet, follow the installation instructions for your operating system on the official Python website.

Step 4: Install packages

Now, let’s install the necessary packages. To interact with Ollama’s local API, we’ll need the requests library. Just run the following command in your terminal:

pip3 install requests

Step 5: Download & run DeepSeek model

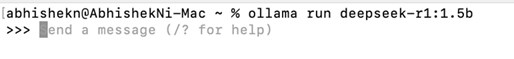

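Once you have Ollama installed, download and start the DeepSeek-R1 (1.5B) model by running this command in your terminal. The first run downloads the model; after that, the same command opens an interactive prompt: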

ollama run deepseek-r1:1.5b

Step 6: Checking installed models

To check which models you have downloaded to your local machine, you can run the following command:

ollama list

Note: To exit the interactive model session, press Ctrl+D in your terminal.

With this, the setup of a self-hosted LLM on your local machine is complete!
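The same list is also available over Ollama's local HTTP API through the `/api/tags` endpoint, which is handy if you want a script to confirm the model is present before sending prompts. Here is a minimal sketch using only the standard library. The helper names `model_names` and `list_local_models` are hypothetical, and the sample payload in the usage comment is trimmed to the one field the code reads.

```python
import json
import urllib.request

OLLAMA_TAGS_URL = "http://localhost:11434/api/tags"


def model_names(tags_payload):
    """Extract model names from the JSON body returned by /api/tags."""
    return [model["name"] for model in tags_payload.get("models", [])]


def list_local_models(url=OLLAMA_TAGS_URL):
    """Query the local Ollama server; Ollama must be running for this to work."""
    with urllib.request.urlopen(url, timeout=10) as resp:
        return model_names(json.load(resp))


# Usage, with Ollama running:
#   print(list_local_models())
# Offline illustration of the parsing step:
print(model_names({"models": [{"name": "deepseek-r1:1.5b"}]}))
```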

We can now explore how to interact with the models and easily generate test cases.

Interacting with a self-hosted LLM for QA test generation

Loading DeepSeek-R1 in Python locally

Once Ollama is installed and the deepseek-r1:1.5b model is downloaded, you can use Python to interact with it programmatically.

Write the following script in any code editor (such as VS Code or PyCharm). It sends a prompt to the locally hosted deepseek-r1:1.5b model and retrieves AI-generated test cases.

import requests

OLLAMA_API_URL = "http://localhost:11434/api/generate"
MODEL_NAME = "deepseek-r1:1.5b"


def generate_test_cases(prompt):
    """Send a prompt to the local Ollama server and return the generated text."""
    payload = {
        "model": MODEL_NAME,
        "prompt": prompt,
        "stream": False,  # Set to True if you want streamed responses
    }
    response = requests.post(OLLAMA_API_URL, json=payload)
    if response.status_code == 200:
        return response.json()["response"]
    else:
        return f"Error: {response.status_code}, {response.text}"


# Example prompt for login page test cases
prompt_text = "Generate functional, negative, and boundary test cases for a login page with username and password fields."
test_cases = generate_test_cases(prompt_text)

print("Generated Test Cases:\n")
print(test_cases)

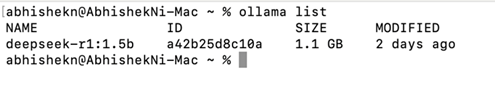
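The `stream` flag in the script above switches Ollama to streamed output. In that mode the reply arrives as newline-delimited JSON objects, each carrying a piece of text in `response`, with `done` set to true on the last one. Below is a minimal sketch of reassembling such a stream. `join_stream` is a hypothetical helper, and the sample chunks are made up for illustration.

```python
import json


def join_stream(lines):
    """Concatenate the 'response' pieces from newline-delimited JSON chunks."""
    parts = []
    for raw in lines:
        if not raw:
            continue  # skip empty keep-alive lines
        chunk = json.loads(raw)
        parts.append(chunk.get("response", ""))
        if chunk.get("done"):
            break
    return "".join(parts)


# Illustrative chunks, not real model output
sample = [
    b'{"response": "Test ", "done": false}',
    b'{"response": "case 1", "done": false}',
    b'{"response": "", "done": true}',
]
print(join_stream(sample))  # prints "Test case 1"
```

With `requests`, the same function can consume `requests.post(OLLAMA_API_URL, json=payload, stream=True).iter_lines()` once `"stream"` is set to `True` in the payload.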

Executing the script

Now that we have our script ready, let’s run it step by step.

- Save the script as generate_test_cases.py

- Open the terminal and navigate to the directory where your script is saved.

- Set up and activate a virtual environment by running the commands below:

- python3 -m venv .venv

- source .venv/bin/activate # For Mac/Linux

- (For Windows, use: .venv\Scripts\activate)

- Because a new virtual environment starts without third-party packages, install requests inside it:

- pip install requests

- Run the script to generate test cases:

- python generate_test_cases.py

You should now see AI-generated test cases in your terminal.
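DeepSeek-R1 is a reasoning model, and depending on your Ollama version, the reply may include the model's reasoning wrapped in `<think>...</think>` tags ahead of the actual answer. If that shows up in your output, a small filter can drop it. This is a sketch under that assumption; `strip_reasoning` is a hypothetical helper name and the sample text is made up.

```python
import re

THINK_BLOCK = re.compile(r"<think>.*?</think>", re.DOTALL)


def strip_reasoning(text):
    """Remove <think>...</think> sections and trim surrounding whitespace."""
    return THINK_BLOCK.sub("", text).strip()


raw = "<think>\nThe user wants login tests.\n</think>\n\n1. Valid login succeeds."
print(strip_reasoning(raw))  # prints "1. Valid login succeeds."
```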

Generate manual test cases

Once your model is up and running, you can start generating detailed manual test cases effortlessly. Let’s try it out with an example!

Example 1: Login page test cases

Prompt:

“Generate functional, negative, and boundary test cases for a login page with username and password fields.”

When you send this prompt to the DeepSeek-R1 (1.5B) model using the script above, it generates structured test cases for you.

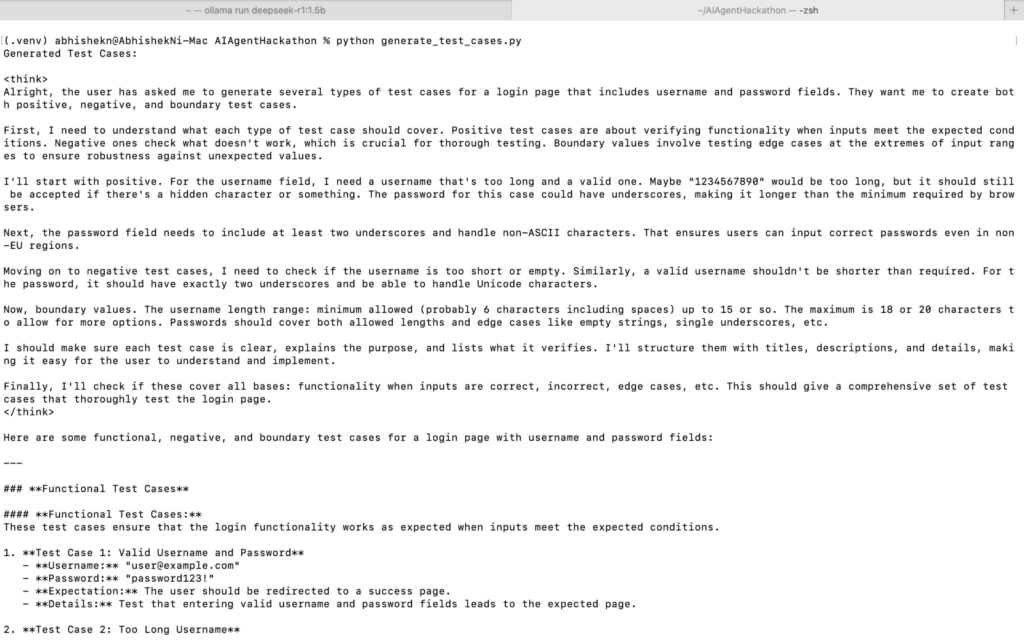

Let’s see how the AI-generated test cases look in the terminal:

1. Functional test cases:

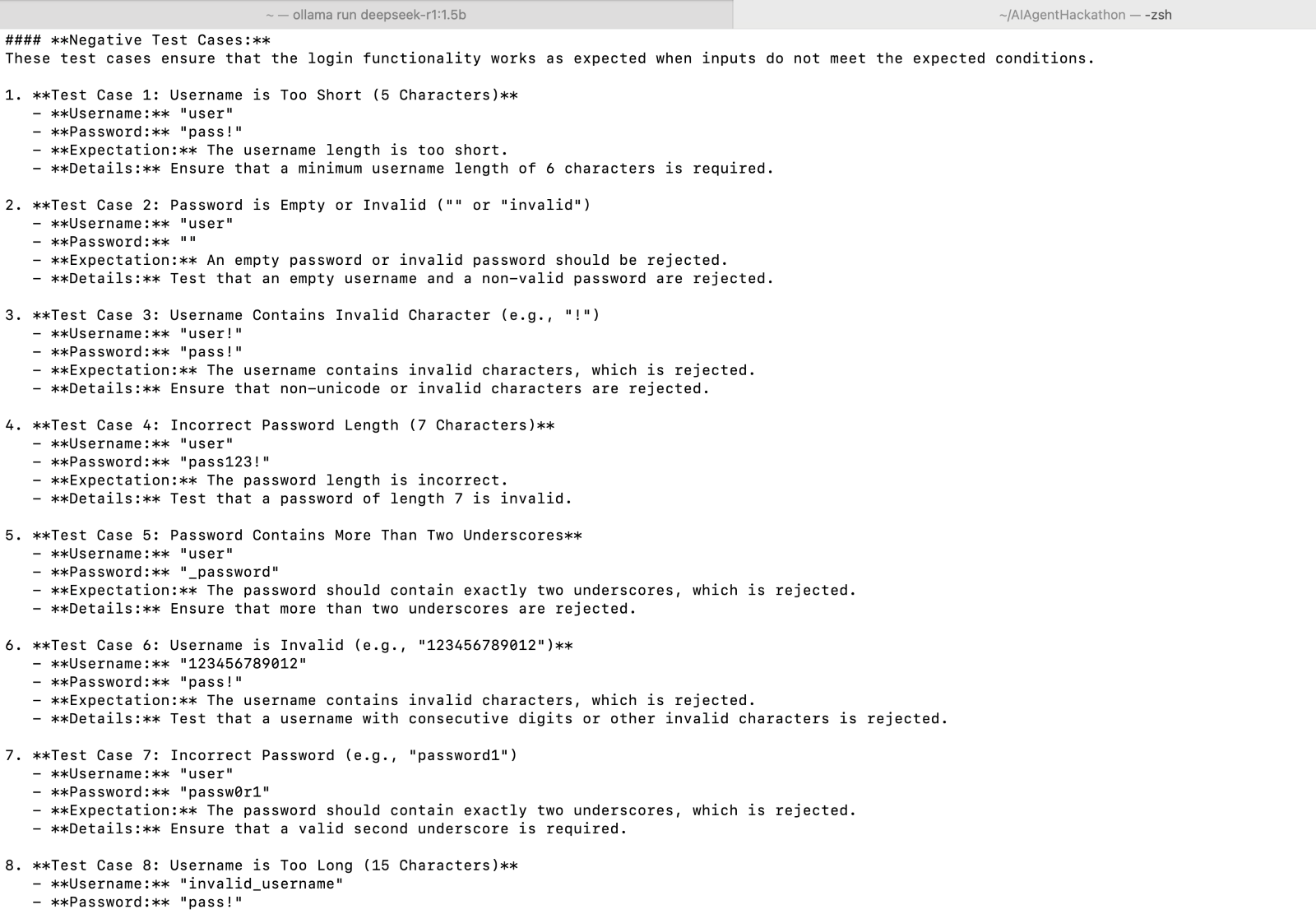

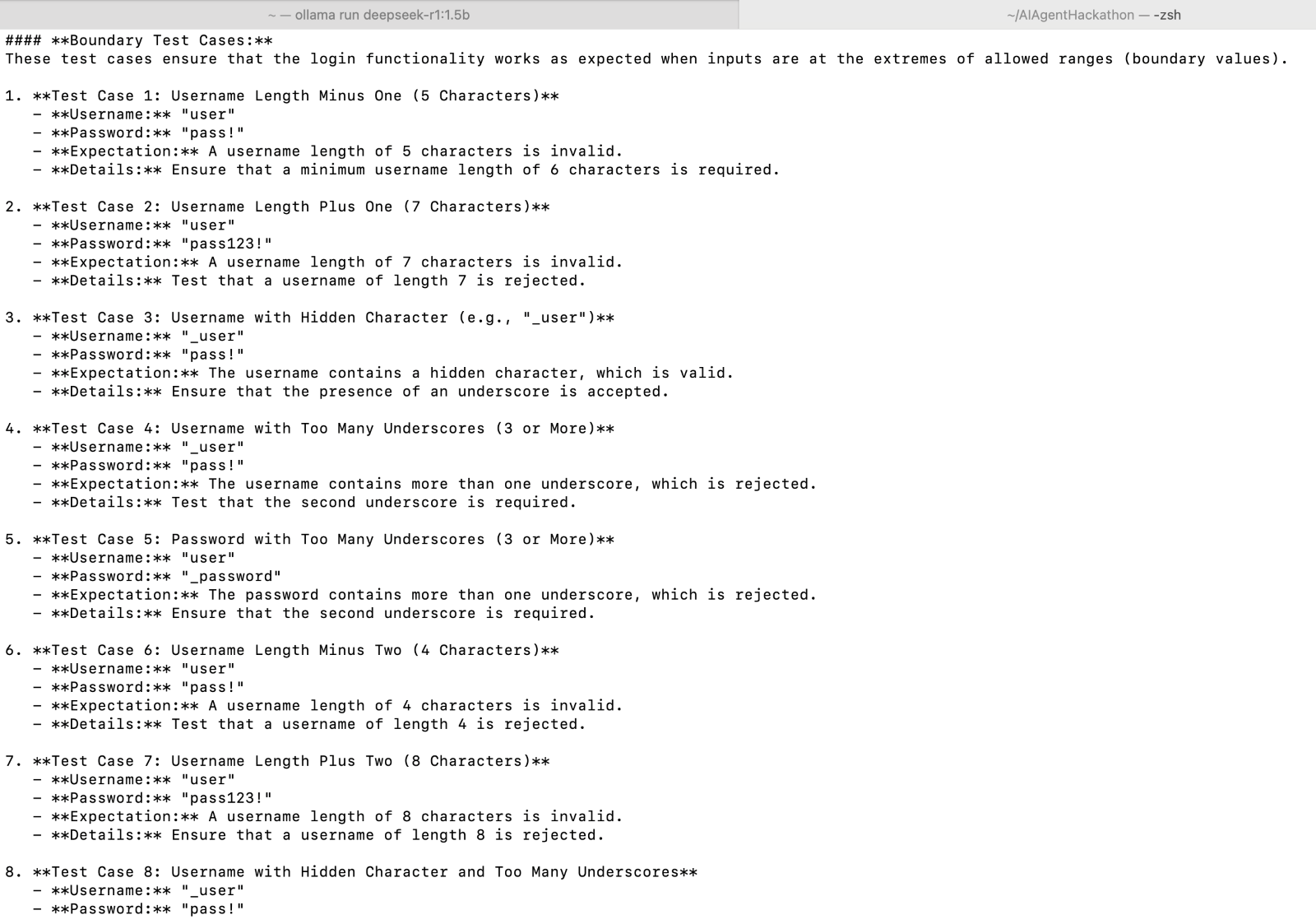

2. Negative test cases:

3. Boundary test cases:

Generate UI automation test

LLMs can also help generate UI automation scripts, saving time on writing repetitive test cases manually. Let’s see how we can use DeepSeek-R1 to create an automation script.

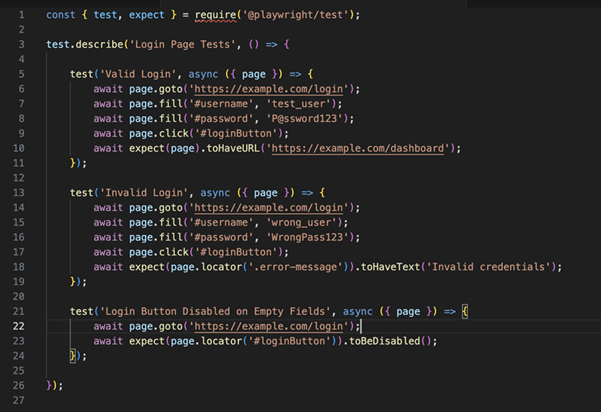

Example 2: Playwright script for login page

Prompt:

“Generate a JavaScript Playwright script to automate a login page test with valid and invalid credentials.”

When we send this prompt to the LLM, it generates a structured Playwright script that, after review, can serve as a starting point for automation. Let’s see it in action!

AI-generated Playwright script:
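LLM replies often wrap code in a Markdown fence with explanation around it, so the script usually has to be separated from the surrounding text before you can save it. Here is a sketch for pulling out the first fenced block. The helper name `extract_code` and the sample reply are assumptions for illustration, not real model output.

```python
import re

FENCE = "`" * 3  # built this way to avoid a literal fence inside this example
CODE_BLOCK = re.compile(FENCE + r"[\w-]*\n(.*?)" + FENCE, re.DOTALL)


def extract_code(reply):
    """Return the body of the first fenced code block, or None if there is none."""
    match = CODE_BLOCK.search(reply)
    return match.group(1).strip() if match else None


# Made-up reply for illustration
reply = (
    "Here is the script:\n"
    + FENCE + "javascript\n"
    + "const { test, expect } = require('@playwright/test');\n"
    + FENCE + "\nRun it with npx playwright test."
)
code = extract_code(reply)
print(code)
```

The extracted text can then be written to a file, for example with `pathlib.Path("login.spec.js").write_text(code)` (the file name is a placeholder), and run through Playwright once you have reviewed it.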

Enhancing LLM responses for QA tasks

To get the best test cases and automation scripts from LLMs, you can refine their responses using prompt engineering techniques. Here’s how:

Specify output formats

Clearly define the expected structure in your prompt. Example: “Generate test cases in a tabular format with columns: Test ID, Scenario, Steps, and Expected Result.”
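If you send many prompts, it helps to build the format instruction in code so every request asks for the same columns. A minimal sketch follows; `build_tabular_prompt` is a hypothetical helper, and the exact wording of the instruction is just one option to experiment with.

```python
def build_tabular_prompt(feature, columns):
    """Compose a prompt that asks for a pipe-separated table with fixed columns."""
    header = " | ".join(columns)
    return (
        f"Generate test cases for {feature}. "
        f"Return only a table with these columns, separated by ' | ': {header}. "
        "Put one test case per line and do not add commentary."
    )


prompt = build_tabular_prompt(
    "a login page with username and password fields",
    ["Test ID", "Scenario", "Steps", "Expected Result"],
)
print(prompt)
```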

Include business logic

Make LLMs aware of domain-specific rules to generate better test cases. Example: “Consider banking regulations while generating test cases for fund transfers.”
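One way to do this consistently is to keep the domain rules in a list and append them to whatever base prompt you use. The sketch below assumes that approach. `add_business_rules` is a hypothetical helper, and the two rules are invented purely for illustration.

```python
def add_business_rules(base_prompt, rules):
    """Append numbered domain rules to a prompt so the model can refer to them."""
    if not rules:
        return base_prompt
    numbered = "\n".join(f"{i}. {rule}" for i, rule in enumerate(rules, start=1))
    return f"{base_prompt}\n\nApply these business rules:\n{numbered}"


# Hypothetical rules, invented for illustration only
rules = [
    "Transfers above the daily limit must be rejected.",
    "Transfers to a new payee require a one-time passcode.",
]
prompt = add_business_rules("Generate test cases for fund transfers.", rules)
print(prompt)
```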

Iterate for improvements

If the output isn’t perfect, refine your prompt or provide feedback. Example: “Regenerate test cases, ensuring each scenario covers edge cases and security validations.”
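For feedback to work, the model needs to see its earlier answer. Ollama's `/api/chat` endpoint accepts a list of messages with roles, so one option is to send the first prompt, the first reply, and your feedback together. Here is a sketch of building that request body. `build_followup_payload` is a hypothetical helper, and the earlier reply is a placeholder string.

```python
def build_followup_payload(model, first_prompt, first_reply, feedback):
    """Build an /api/chat request body that carries the earlier exchange."""
    return {
        "model": model,
        "stream": False,
        "messages": [
            {"role": "user", "content": first_prompt},
            {"role": "assistant", "content": first_reply},
            {"role": "user", "content": feedback},
        ],
    }


payload = build_followup_payload(
    "deepseek-r1:1.5b",
    "Generate test cases for a login page.",
    "1. Valid login succeeds.",  # placeholder for the model's earlier reply
    "Regenerate test cases, ensuring each scenario covers edge cases and security validations.",
)
print(len(payload["messages"]))  # prints 3
```

Posting this payload to `http://localhost:11434/api/chat` with `requests.post(..., json=payload)` returns the refined answer under `["message"]["content"]` in the JSON reply.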

Final Tip: Experiment with different phrasing and constraints to fine-tune responses for QA efficiency!

Key challenges and considerations

Self-hosted LLMs do offer great flexibility and security, but there are important challenges and considerations to keep in mind.

Hardware and performance

- Requires at least 8GB of RAM; larger models run far better with a GPU.

- Performance may be slow on regular laptops.
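To put a number on "slow", you can use the timing metadata that a non-streamed `/api/generate` reply carries alongside the text. Going by Ollama's API documentation, `eval_count` is the number of generated tokens and `eval_duration` is the generation time in nanoseconds. Here is a sketch that turns those into tokens per second. `tokens_per_second` is a hypothetical helper and the sample values are invented.

```python
def tokens_per_second(reply_json):
    """Compute generation speed from the eval_count and eval_duration fields."""
    count = reply_json.get("eval_count")
    duration_ns = reply_json.get("eval_duration")
    if not count or not duration_ns:
        return None  # timing metadata missing
    return count / (duration_ns / 1_000_000_000)


sample = {"eval_count": 300, "eval_duration": 15_000_000_000}  # invented values
print(tokens_per_second(sample))  # prints 20.0
```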

Accuracy and output quality

- LLMs may generate generic or incorrect test cases—manual validation is needed.

- Better prompts = Better test cases (explicitly request structured output).
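Manual review can be preceded by a cheap automated pass that catches obvious problems such as empty fields or repeated scenarios. The sketch below assumes the test cases have already been parsed into dictionaries. The field names and the helper `find_issues` are hypothetical, and this does not replace a human check of whether the cases are actually correct.

```python
def find_issues(test_cases, required=("id", "scenario", "expected")):
    """Return human-readable problems found in a list of test-case dicts."""
    issues = []
    seen = set()
    for index, case in enumerate(test_cases, start=1):
        for field in required:
            if not str(case.get(field, "")).strip():
                issues.append(f"case {index}: missing '{field}'")
        key = str(case.get("scenario", "")).strip().lower()
        if key and key in seen:
            issues.append(f"case {index}: duplicate scenario")
        seen.add(key)
    return issues


# Made-up cases for illustration
cases = [
    {"id": "TC1", "scenario": "Valid login", "expected": "Dashboard opens"},
    {"id": "TC2", "scenario": "valid login", "expected": ""},
]
print(find_issues(cases))
```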

Formatting and parsing issues

- LLMs may not always return clean tables—parsing functions help.

- E.g., specify the “ID | Scenario | Expected Result” format in the prompt.
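Here is what such a parsing function might look like for the pipe-separated format mentioned above. It is a sketch, not a complete Markdown table parser: `parse_pipe_table` is a hypothetical helper, it silently skips any line that does not have exactly the expected number of cells, and the sample output is made up.

```python
def parse_pipe_table(text, columns=("ID", "Scenario", "Expected Result")):
    """Turn 'a | b | c' lines into dicts, skipping headers, rules, and malformed rows."""
    rows = []
    for line in text.splitlines():
        cells = [c.strip() for c in line.strip().strip("|").split("|")]
        if len(cells) != len(columns):
            continue  # not a table row
        if cells == list(columns) or all(set(c) <= set("-: ") for c in cells):
            continue  # header row or Markdown separator row
        rows.append(dict(zip(columns, cells)))
    return rows


# Made-up model output for illustration
output = """Here are the test cases:
| ID | Scenario | Expected Result |
|----|----------|-----------------|
| TC1 | Valid login | User reaches dashboard |
| TC2 | Empty password | Error message shown |
"""
print(parse_pipe_table(output))
```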

Context limits and large inputs

- Small models forget long inputs—break large test cases into smaller chunks.

- Consider larger models (7B+) for complex scenarios.
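Breaking a large input into chunks can be as simple as batching the requirements and sending one prompt per batch. A minimal sketch follows. The batch size of 3 and the `REQ-n` identifiers are arbitrary placeholders, and `chunk` is a hypothetical helper.

```python
def chunk(items, size):
    """Split a list into consecutive batches of at most `size` items."""
    if size < 1:
        raise ValueError("size must be at least 1")
    return [items[i:i + size] for i in range(0, len(items), size)]


# Hypothetical requirement IDs, invented for illustration
requirements = [f"REQ-{n}" for n in range(1, 8)]
for batch in chunk(requirements, 3):
    prompt = "Generate test cases for these requirements:\n" + "\n".join(batch)
    print(len(batch))  # prints 3, 3, 1 on successive lines
```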

Integration and automation

- Export test cases to Jira, Zephyr, Xray for tracking.

- Can automate UI test script generation for Cypress, Playwright, Selenium.
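For the export step, CSV is a common interchange format that test management tools can usually import in some form. The sketch below writes parsed test cases to CSV text. The column names are placeholders, `to_csv` is a hypothetical helper, and the columns each tool expects depend on the tool and its configuration, so check its import documentation before relying on this layout.

```python
import csv
import io


def to_csv(rows, columns):
    """Serialize test-case dicts to CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=columns, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


# Made-up test case for illustration
rows = [{"ID": "TC1", "Scenario": "Valid login", "Expected Result": "Dashboard opens"}]
print(to_csv(rows, ["ID", "Scenario", "Expected Result"]))
```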

Security and ethical compliance

- Never train LLMs with sensitive or proprietary data.

- Ensure AI-generated test cases meet security guidelines.
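On the security side, one cheap safeguard is to check, before any prompt is sent, that the configured API URL really points at the local machine. Here is a sketch of such a guard. `is_local_endpoint` is a hypothetical helper, and it only inspects the URL, so it says nothing about how the Ollama server itself is exposed on the network.

```python
import ipaddress
from urllib.parse import urlparse


def is_local_endpoint(url):
    """True if the URL's host is 'localhost' or a loopback IP address."""
    host = urlparse(url).hostname or ""
    if host == "localhost":
        return True
    try:
        return ipaddress.ip_address(host).is_loopback
    except ValueError:
        return False  # a hostname other than localhost


print(is_local_endpoint("http://localhost:11434/api/generate"))  # prints True
print(is_local_endpoint("https://api.example.com/v1"))  # prints False
```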

Conclusion

Self-hosted Large Language Models (LLMs) like DeepSeek-R1 (1.5B) present a valuable alternative for QA teams that company policies or regulated environments prevent from using cloud-based AI tools. But getting the best out of them demands careful prompt engineering, rigorous validation, and sensible resource planning.

When you run DeepSeek-R1 (1.5B) in Ollama, you can automate the generation of manual test cases, which can save hours of work every week. You can also generate draft UI automation scripts in a matter of seconds instead of spending hours coding them by hand. And perhaps most notably, all of this happens locally, so your data remains secure and confidential.

Whether you’re new to QA or a veteran of the field, self-hosted LLMs can really enhance your workflow. The best part is that they do all this while keeping your data private and secure. Explore this technology and see how it can improve your quality assurance process.